In 2024 alone, AI wrote over 256 billion lines of code. Let that sink in. According to Statista, 41% of all software code is now AI-generated. That number is only going up, and so are the risks.

AI code is fast. Sometimes too fast. We’re now seeing a 10X spike in exposed API endpoints that lack basic authorization and input validation. And here’s the kicker: bad AI code isn’t a one-time bug. If it lands in an open-source repo, it can become training data for future AI models, spreading those vulnerabilities even further.

So, what can we do? Make sure every line, whether a human or a machine wrote it, is scanned and secured.

That’s where AI SAST comes in: Static Application Security Testing built for developers working in the age of LLMs, Copilot, and autopilot coding.

What’s the Problem With AI-Generated Code?

AI-generated code looks good. It compiles. It runs. It even comes with comments. But under the hood, it often skips:

- Authentication and access control

- Input validation and sanitization

- Secrets handling (e.g., hardcoded tokens)

- Error handling and edge case logic

- Safe use of libraries and APIs

That’s not because AI is malicious—it just doesn’t understand your threat model. And it’s being used more than ever, often with no review at all.
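Take the secrets item as an example. The usual fix is to keep tokens out of source entirely and read them from the environment (or a secrets manager) at startup. Below is a minimal sketch. It assumes a hypothetical API_TOKEN variable, and the `env` parameter is there only to make the function easy to test:

```javascript
// Anti-pattern often seen in generated snippets (don't do this):
// const API_TOKEN = 'sk_live_1234';

// Safer: read the secret from the environment at startup and fail fast if
// it's missing. API_TOKEN is a hypothetical variable name.
function getApiToken(env = process.env) {
  const token = env.API_TOKEN;
  if (typeof token !== 'string' || token.trim() === '') {
    throw new Error('API_TOKEN is not set');
  }
  return token;
}
```

Failing at startup is deliberate. A missing secret shows up immediately at deploy time, not later as a confusing authentication error.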

A Realistic Example of a Vulnerability in AI Code

Let’s say Copilot generates this in an Express.js app:

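```javascript
// Typical AI-suggested route: req.body goes straight to the database
app.post('/submit', (req, res) => {
  db.insert(req.body); // no auth check, no validation, no sanitization
  res.send('OK');
});
```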

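It looks fine until you realize there’s no authentication, input validation, or sanitization. Whatever the client sends is written to the database as-is. In a production context, that’s a fast track to injection vulnerabilities, mass assignment (extra fields, such as an isAdmin flag, slipping into the stored record), or stored XSS once that data is rendered in a page.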
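One way to close the validation gap is to allowlist fields and check types before anything reaches the database. The sketch below is plain JavaScript with no dependencies. The field names (`name`, `email`, `message`), the 1,000-character limit, and the `validateSubmission` helper are all hypothetical. It doesn't cover authentication, and output encoding still matters wherever the data is rendered. In a real app you'd more likely reach for a schema-validation library than hand-rolled checks.

```javascript
// Hypothetical allowlist for the /submit route: only these fields are accepted.
const ALLOWED_FIELDS = ['name', 'email', 'message'];
const MAX_LENGTH = 1000; // hypothetical per-field limit

// Returns { ok: true, value } with a clean copy of the input,
// or { ok: false, error } describing the first problem found.
function validateSubmission(body) {
  if (body === null || typeof body !== 'object' || Array.isArray(body)) {
    return { ok: false, error: 'body must be a JSON object' };
  }

  // Reject unexpected keys instead of silently storing them (mass assignment).
  const unknown = Object.keys(body).filter((key) => !ALLOWED_FIELDS.includes(key));
  if (unknown.length > 0) {
    return { ok: false, error: `unexpected fields: ${unknown.join(', ')}` };
  }

  const value = {};
  for (const field of ALLOWED_FIELDS) {
    const raw = body[field];
    // Require plain strings, so objects such as { "$gt": "" } never reach the DB.
    if (typeof raw !== 'string' || raw.trim() === '' || raw.length > MAX_LENGTH) {
      return { ok: false, error: `${field} must be a non-empty string of at most ${MAX_LENGTH} characters` };
    }
    value[field] = raw.trim();
  }
  return { ok: true, value };
}

// Possible use inside the route (assumes app.use(express.json()) and the
// same db object as the original snippet):
// app.post('/submit', (req, res) => {
//   const result = validateSubmission(req.body);
//   if (!result.ok) return res.status(400).json({ error: result.error });
//   db.insert(result.value);
//   res.send('OK');
// });
```

The error goes back as JSON rather than through res.send with a plain string. That choice matters: Express serves string bodies as HTML by default, so echoing attacker-chosen field names would reopen the XSS hole.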

What Is AI SAST?

AI SAST isn’t about securing the AI itself. It’s about securing the AI code: the stuff being generated, copied, pasted, and committed into your app.

It works like traditional SAST: it scans source code before it runs, flags vulnerabilities, and suggests fixes. But it’s built with modern, AI-assisted workflows in mind.
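To make the idea of scanning code before it runs concrete, here is a deliberately tiny pattern-based checker. It's a toy and not how production SAST engines work: those parse code into syntax trees and track data flow from sources like req.body to sinks like database calls. The two rules and their IDs are made up for illustration.

```javascript
// Toy rules: each has an ID, a severity, a regex, and a message.
// These rule IDs and patterns are hypothetical, for illustration only.
const RULES = [
  {
    id: 'hardcoded-secret',
    severity: 'high',
    pattern: /(api[_-]?key|token|secret|password)\s*[:=]\s*['"][^'"]{8,}['"]/i,
    message: 'Possible hardcoded secret',
  },
  {
    id: 'unvalidated-body-to-db',
    severity: 'high',
    pattern: /\bdb\.\w+\(\s*req\.body\s*\)/,
    message: 'Request body passed directly to a database call',
  },
];

// Scan source text line by line; return findings with 1-based line numbers.
function scanSource(source, rules = RULES) {
  const findings = [];
  source.split('\n').forEach((text, i) => {
    for (const rule of rules) {
      if (rule.pattern.test(text)) {
        findings.push({ rule: rule.id, severity: rule.severity, line: i + 1, message: rule.message });
      }
    }
  });
  return findings;
}
```

Run on the Express snippet above, the second rule would flag the db.insert(req.body) line. A real engine goes further. It would also catch `const data = req.body; db.insert(data);`, which this line-based regex misses, because it follows the value through the variable.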

Some platforms are even experimenting with AI and ML to improve pattern recognition and reduce false positives. But here, our focus is simple: use static analysis to secure code written by AI tools.

Think of it as your reality check. Because “it compiles” isn’t the same as “it’s secure.”

How AI SAST Secures AI Code in Your Workflow

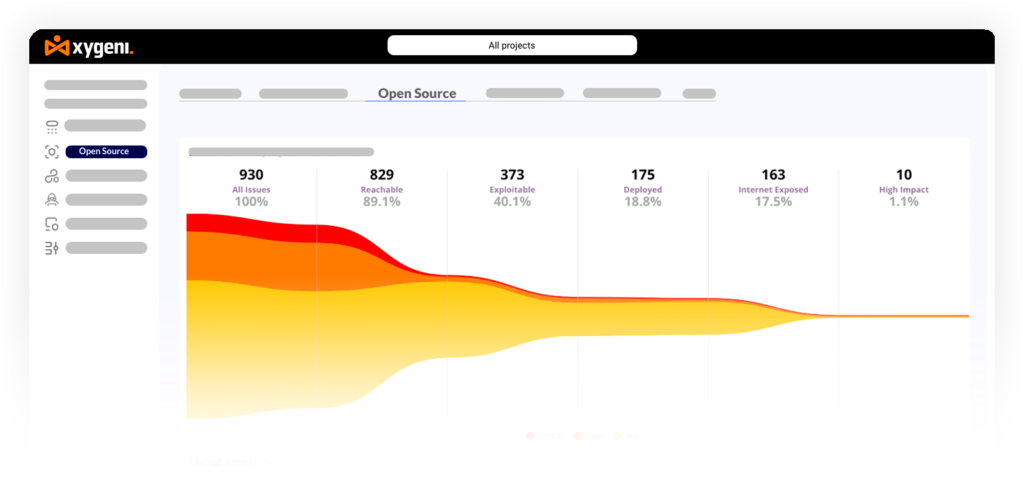

Xygeni Code Security is purpose-built for modern development environments—fast, automated, and connected across your toolchain.

You get:

- A native SAST scanner for real-time static analysis of your codebase

- Support for malicious code detection in source code

- Seamless third-party scanner integration—so you’re not locked in or forced to switch

Already using SonarQube, Snyk, Checkmarx, or Veracode? No problem—Xygeni can import their findings and centralize everything under one clean dashboard. That means better visibility, smarter prioritization, and no duplicated effort—especially when you’re dealing with the unpredictable risks of AI code.
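Centralizing results from several scanners mostly comes down to two steps: normalizing findings into one shape and collapsing duplicates. The sketch below is a generic illustration, not Xygeni’s import format or logic. It assumes each tool’s output has already been mapped to a hypothetical `{ tool, file, line, cwe, severity }` shape, and it treats two findings as duplicates when file, line, and CWE all match.

```javascript
const SEVERITY_RANK = { low: 1, medium: 2, high: 3, critical: 4 };

// Merge findings from multiple tools, keeping one entry per (file, line, cwe)
// and recording every tool that reported it. The highest severity wins.
function mergeFindings(findings) {
  const byKey = new Map();
  for (const f of findings) {
    const key = `${f.file}:${f.line}:${f.cwe}`;
    const existing = byKey.get(key);
    if (!existing) {
      byKey.set(key, { file: f.file, line: f.line, cwe: f.cwe, severity: f.severity, tools: [f.tool] });
      continue;
    }
    if (!existing.tools.includes(f.tool)) existing.tools.push(f.tool);
    if (SEVERITY_RANK[f.severity] > SEVERITY_RANK[existing.severity]) {
      existing.severity = f.severity;
    }
  }
  return [...byKey.values()];
}
```

Keeping the list of reporting tools on each merged entry preserves provenance. You can still see which scanners agreed, while fixing the issue only once.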

Inside the Xygeni Console

Head to Code Security → Risks (SAST) and you’ll see:

- All code vulnerabilities—Xygeni and third-party

- Line-level issue details and remediation advice

- Filters by severity, tool, and workflow step

- Malicious code evidence

- Support for custom rules, so you can tune it to your codebase

Bottom line: it doesn’t just tell you “something’s wrong.” It shows you what, where, and why—and how to fix it.

Pro Tips for Using SAST in AI-Heavy Projects

Here’s how to make the most of AI SAST without disrupting your flow:

- Set it to fail your build if high/critical issues are found.

- Run scans on PRs, not just nightly or weekly builds.

- Combine SAST with secrets scanning and IaC detection for full-stack coverage.

- Review AI-generated snippets by hand, especially anything dealing with authentication, file I/O, or database access.

- Ignore nothing—even low-severity issues can escalate fast depending on how the app evolves.

Wrapping Up: SAST Isn’t Sexy, But It Works

Look—we all want to ship fast. But fast without secure is how breaches happen. AI SAST is your way to speed up without screwing up.

It’s not about being paranoid. It’s about being practical.

Use the tools. Automate the scans. Treat AI code like third-party code: untrusted until proven safe.

Ready to see what your AI-generated code is hiding?

Start a free 14-day trial. No credit card. No noise. Just clean, secure code.