Vibe coding is reshaping how software is built, and AI Vibe Coding Security is rapidly becoming a structural requirement for modern DevSecOps teams. Developers increasingly rely on copilot-style suggestions, agentic assistants, and MCP-connected tooling to accelerate delivery. As a result, the cycle from ideation to commit is compressed, change volume increases, and AI systems begin to participate directly in repository, dependency, and CI/CD decisions.

This acceleration introduces a security tradeoff. Traditional Application Security (AppSec) programs were designed for environments where most code was human-authored, diffs were incremental, and validation could be layered onto artifacts through SAST, SCA, and periodic review. Under vibe coding, however, risk often emerges before the artifact stage: inside prompts, retrieved context, tool invocation chains, and autonomous execution paths.

The implication is practical: security assurance must expand from artifact scanning to workflow governance.

What Is Vibe Coding Security?

In this context, vibe coding describes an AI-augmented development workflow where engineers focus on intent while AI systems generate, refactor, and operationalize changes across code and delivery infrastructure.

The shift is not merely about speed. It is about relocating decision-making into a probabilistic control plane. Prompts influence model interpretation. Retrieved context influences outputs. Tool connectors may translate output into repository changes, pipeline modifications, or infrastructure updates.

The effective execution chain becomes:

Prompt → Model Interpretation → Tool Invocation → Artifact Generation → Deployment

Each step can cross a trust boundary.

OWASP’s guidance for LLM applications and agentic systems highlights risks such as prompt injection, insecure output handling, and unsafe tool execution. These risks are not confined to “AI applications”; they map directly to AI-assisted development workflows, where model output becomes executable behavior.

The New Risk Landscape: AI Changes the Failure Mode

AI-assisted development compresses decision cycles and expands the effective attack surface across code, dependencies, infrastructure, and operations.

The risk is socio-technical. Failures may originate in:

- Model behavior

- Tool integrations

- Over-permissive connectors

- Human overreliance on plausible output

This framing aligns with the NIST AI Risk Management Framework (AI RMF), which treats AI risk as lifecycle-based and system-wide rather than component-specific. The AI RMF emphasizes governance, traceability, continuous evaluation, and measurable controls across the AI lifecycle.

At the code layer, AI can generate syntactically valid output that embeds subtle semantic flaws: missing authorization boundaries, unsafe parsing assumptions, insecure defaults. Several industry analyses of AI-generated code have reported elevated rates of logic and security defects compared with purely human-authored changes, particularly in memory-unsafe languages.
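As a hypothetical illustration of a missing authorization boundary, consider the two Python functions below. The `Invoice` type, the in-memory `INVOICES` store, and both function names are invented for this sketch. The first version is the kind of plausible, syntactically valid code that passes review at a glance; the second adds the ownership check it lacks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int
    amount_cents: int


# Hypothetical in-memory store standing in for a database table.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, amount_cents=4_999),
    2: Invoice(invoice_id=2, owner_id=200, amount_cents=12_000),
}


def get_invoice_unsafe(current_user_id: int, invoice_id: int) -> Invoice:
    # Runs without error, but never uses current_user_id: any authenticated
    # user can read any invoice by guessing its ID (an IDOR-style flaw).
    return INVOICES[invoice_id]


def get_invoice(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Enforce the authorization boundary explicitly. The same error is raised
    # for "does not exist" and "not yours", so valid IDs are not revealed.
    if invoice is None or invoice.owner_id != current_user_id:
        raise PermissionError("invoice not found or access denied")
    return invoice
```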

At the supply chain layer, AI may recommend libraries without strong provenance guarantees. Sonatype’s reporting on malicious open-source packages demonstrates the growing scale of typosquatting, dependency confusion, and poisoned updates. In a vibe coding environment, dependency ingestion accelerates, so each AI-suggested package is another opportunity for a typosquatted or poisoned name to enter the build.
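A minimal sketch of one supply-chain guardrail, assuming the team maintains an allowlist of approved package names (passed as the hypothetical `trusted` argument): flag any AI-suggested dependency whose name is within a small edit distance of an approved package but is not that package. Names are first normalized following PyPI's PEP 503 rules. This is a heuristic for catching typosquats, not a provenance check, and it will produce some false positives for legitimately similar names.

```python
from __future__ import annotations

import re


def normalize(name: str) -> str:
    # PEP 503 normalization: lowercase, and runs of "-", "_", "." are equivalent.
    return re.sub(r"[-_.]+", "-", name).lower()


def levenshtein(a: str, b: str) -> int:
    # Dynamic-programming edit distance (insertions, deletions, substitutions).
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def possible_typosquat(candidate: str, trusted: set[str], max_distance: int = 2) -> str | None:
    """Return the approved package `candidate` is suspiciously close to, else None."""
    cand = normalize(candidate)
    approved = {normalize(t) for t in trusted}
    if cand in approved or not approved:
        return None  # exact match (or nothing to compare against)
    closest = min(approved, key=lambda t: levenshtein(cand, t))
    return closest if levenshtein(cand, closest) <= max_distance else None
```

A `None` result only means the name is not a near-miss of an approved package; whether an unlisted package may be used at all remains a separate allowlist decision.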

At the infrastructure layer, AI-generated IaC can confidently introduce permissive network paths, over-broad IAM roles, or insecure pipeline steps. CISA’s secure cloud baseline guidance emphasizes codified configuration enforcement precisely because misconfiguration remains one of the highest-frequency failure modes.
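To show what codified enforcement can look like at its simplest, here is a sketch that flags IAM policy statements allowing wildcard actions (`*` or `service:*`) on all resources (`"Resource": "*"`). It assumes standard AWS IAM policy JSON and ignores `NotAction`, conditions, and the many other patterns that dedicated IaC scanners cover, so treat it as an illustration of the idea rather than a complete check.

```python
from __future__ import annotations

import json


def _as_list(value) -> list:
    # IAM accepts either a single value or a list for Statement, Action, Resource.
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy_json: str) -> list[dict]:
    """Return Allow statements granting wildcard actions on all resources."""
    policy = json.loads(policy_json)
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action and "*" in resources:
            findings.append(stmt)
    return findings
```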

Taken together, AI does not merely increase vulnerability volume. It shifts where risk originates and how it propagates.

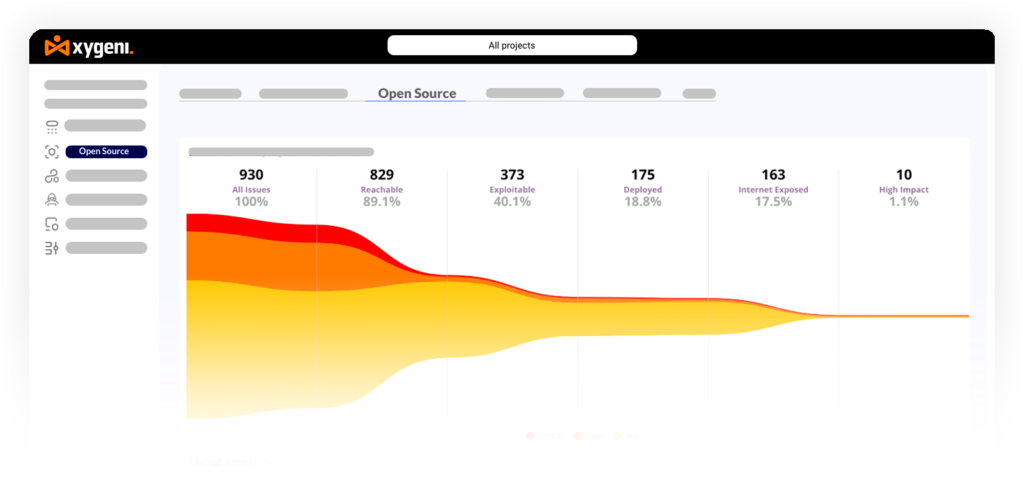

Source: Xygeni Security. All Rights Reserved

How Vibe Coding Security Exposes Hidden Pipeline Risk

In many organizations, AppSec coverage becomes synonymous with SAST plus SCA.

However, that model assumes risk is visible in static artifacts.

Consider a mature DevSecOps setup:

- SAST runs on every pull request

- SCA checks dependencies against known CVEs

- IaC scanning validates Terraform and Kubernetes manifests

- Secrets detection blocks obvious credential leaks

Everything appears compliant.

Now introduce vibe coding. A developer asks an AI assistant to optimize the CI pipeline. The assistant modifies the workflow to pull a remote script for faster caching. The change is syntactically valid. No CVE is introduced. No secret is exposed.

The pipeline remains green.

Yet the remote script executes with the runner’s permissions. If the runner has access to deployment credentials or artifact signing keys, the system has effectively expanded its attack surface without any signature-based alert.

This is not a classic vulnerability. It is an unsafe decision-to-action path.

OWASP’s MCP security guidance explicitly warns that tool connectors represent high-risk trust boundaries requiring least privilege and governance workflows.

Traditional artifact scanning does not model this class of failure.
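Part of this gap can be narrowed with a pipeline-integrity policy that inspects what a workflow step executes, rather than matching CVEs or secret signatures. The sketch below is a heuristic, line-based scan of a CI workflow file for a download tool piped straight into a shell, as in the caching scenario described earlier. The regular expression is an assumption of this example; it misses obfuscated or multi-line variants, so it complements, rather than replaces, least-privilege runner permissions.

```python
from __future__ import annotations

import re

# Heuristic (an assumption of this sketch): curl or wget output piped into a shell.
PIPE_TO_SHELL = re.compile(r"\b(curl|wget)\b[^\n|]*\|\s*(sudo\s+)?(ba|z|da)?sh\b")


def remote_exec_lines(workflow_text: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs that fetch a script and execute it."""
    hits = []
    for lineno, line in enumerate(workflow_text.splitlines(), start=1):
        if PIPE_TO_SHELL.search(line):
            hits.append((lineno, line.strip()))
    return hits
```

Running this in the pull-request pipeline and failing the check on any hit turns a silent workflow change into a visible review item.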

Source: NIST AI Resource Center

Why Traditional AppSec Breaks Without Vibe Coding Security Controls

Traditional AppSec tools remain necessary; they are not obsolete. However, they were never designed to evaluate:

- AI-driven logic propagation

- Prompt injection risk in development workflows

- Unsafe tool invocation

- Pipeline integrity drift

- Artifact provenance gaps

SAST struggles with context-dependent business logic vulnerabilities. OWASP’s Web Security Testing Guide acknowledges that business logic flaws require workflow-level understanding. AI can replicate flawed logic at scale before such flaws are detected.

SCA detects known vulnerable components. It does not verify intent or provenance, and it cannot flag a malicious insertion that has no CVE. NIST’s Secure Software Development Framework (SSDF) emphasizes maintaining provenance and traceability precisely because integrity is not guaranteed by vulnerability enumeration alone.

Agentic systems intensify the problem. When assistants can open pull requests, modify repositories, adjust CI permissions, or trigger deployments, misuse may never present as a code defect. It manifests as a capability control failure.
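A capability control can be sketched as a deny-by-default gate in front of every tool an assistant may invoke. The `ToolPolicy` and `ToolCall` types, the `authorize` function, and the tool names mentioned below are hypothetical; neither MCP nor any specific assistant defines this policy format. The point is that the decision happens before execution and does not depend on the resulting code looking defective.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolPolicy:
    # Hypothetical policy: tools an assistant may call at all, and the subset
    # that additionally needs a named human approver before each call.
    allowed_tools: frozenset[str]
    requires_approval: frozenset[str] = frozenset()


@dataclass
class ToolCall:
    tool: str
    arguments: dict = field(default_factory=dict)


def authorize(call: ToolCall, policy: ToolPolicy, approved_by: str | None = None) -> bool:
    """Deny by default: allow only listed tools, and gate sensitive ones on approval."""
    if call.tool not in policy.allowed_tools:
        return False
    if call.tool in policy.requires_approval and not approved_by:
        return False
    return True
```

For example, with `allowed_tools` set to `{"read_file", "open_pull_request"}` and `open_pull_request` listed in `requires_approval`, a call to an unlisted tool such as `update_ci_permissions` is refused, and `open_pull_request` is allowed only when `approved_by` is set.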

This is why AI Vibe Coding Security reframes the question from:

“Is this artifact vulnerable?”

to:

“Can we trust how this artifact was produced?”

Supply-chain integrity frameworks such as SLSA emphasize build provenance and tamper resistance as foundational controls. Provenance answers where, when, and how an artifact was produced. Under vibe coding, provenance becomes a primary assurance mechanism, not a compliance checkbox.
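As a minimal sketch of the “how was this produced” question, the function below checks that an in-toto Statement declares the SLSA provenance v1 predicate type and names the exact bytes being deployed, by SHA-256 digest. It deliberately covers only the digest binding: it does not verify the signature on the statement, the builder identity, or the source repository, all of which a real verifier (for example, the SLSA project’s `slsa-verifier`) must also check.

```python
from __future__ import annotations

import hashlib
import json

SLSA_PROVENANCE_V1 = "https://slsa.dev/provenance/v1"


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def provenance_matches(artifact: bytes, statement_json: str) -> bool:
    """Check that an in-toto Statement claims SLSA provenance for exactly these bytes.

    Note: this does NOT verify the statement's signature or who produced it.
    """
    statement = json.loads(statement_json)
    if statement.get("predicateType") != SLSA_PROVENANCE_V1:
        return False
    digest = sha256_hex(artifact)
    # Any listed subject whose sha256 digest equals the artifact's digest counts.
    return any(
        subject.get("digest", {}).get("sha256") == digest
        for subject in statement.get("subject", [])
    )
```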

What to Do Instead: A Workflow-Centric Security Model

The white paper proposes a practical operating model aligned with both OWASP guidance and the NIST AI RMF:

1. Govern AI Tooling

Explicitly define which AI assistants, connectors, and MCP servers are permitted. Apply least privilege. Require review for high-sensitivity domains such as authentication, cryptography, IAM, and CI/CD configuration.
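Requiring review for high-sensitivity domains can start as simply as mapping changed paths to domains. The path patterns in `SENSITIVE_PATHS` are hypothetical and would need to be adapted to a real repository layout; on GitHub, a `CODEOWNERS` file combined with branch protection can enforce the resulting reviewer requirement natively.

```python
from __future__ import annotations

from fnmatch import fnmatch

# Hypothetical mapping from sensitive domains to path globs; adapt to your repo.
SENSITIVE_PATHS = {
    "authentication": ["*/auth/*", "*login*"],
    "cryptography": ["*/crypto/*"],
    "iam": ["*iam*.tf", "*/policies/*.json"],
    "ci/cd": [".github/workflows/*", "*Jenkinsfile", "*.gitlab-ci.yml"],
}


def domains_requiring_review(changed_files: list[str]) -> set[str]:
    """Return the sensitive domains touched by a change set."""
    touched = set()
    for path in changed_files:
        for domain, patterns in SENSITIVE_PATHS.items():
            if any(fnmatch(path, pattern) for pattern in patterns):
                touched.add(domain)
    return touched
```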

2. Identify Risk Continuously

Map risk across code, dependencies, infrastructure, and tool calls. Treat prompts and context as control surfaces. Monitor supply-chain ingestion in real time.
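Treating retrieved context as a control surface can begin with recording which sources carry instruction-like text before it reaches the model. The patterns below are a small, hypothetical sample; prompt injection is easy to rephrase, so matches should feed review and telemetry rather than serve as a security boundary on their own.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately small pattern list. Treat matches as a signal for
# logging and review, not as a complete prompt-injection defense.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"you are now\b", re.I),
    re.compile(r"\b(run|execute)\b.*\b(curl|wget)\b", re.I),
    re.compile(r"(exfiltrate|send) .*\b(token|secret|credential)s?\b", re.I),
]


def flag_context(chunks: dict[str, str]) -> dict[str, list[str]]:
    """Map each retrieved source to the suspicious patterns its text matched."""
    flagged = {}
    for source, text in chunks.items():
        hits = [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(text)]
        if hits:
            flagged[source] = hits
    return flagged
```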

3. Validate & Measure

Integrate SAST, SCA, IaC scanning, and secret detection into IDE and pull request workflows. Extend telemetry to agent behavior and tool invocation patterns. Align measurement with NIST AI RMF lifecycle functions.
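Extending telemetry to tool invocations largely means emitting one structured event per call and comparing the stream against a baseline. In this sketch the event fields, the logger name, and the per-agent baseline set are all assumptions; in practice the events would flow into whatever log pipeline or SIEM the team already operates.

```python
from __future__ import annotations

import json
import logging
from collections import Counter
from datetime import datetime, timezone

logger = logging.getLogger("agent.telemetry")  # hypothetical logger name


def record_tool_call(agent: str, tool: str, target: str) -> dict:
    """Log one structured JSON event per tool invocation and return it."""
    event = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "tool": tool,
        "target": target,
    }
    logger.info(json.dumps(event))
    return event


def unexpected_tools(events: list[dict], baseline: set[str]) -> Counter:
    """Count calls to tools outside the agent's expected baseline."""
    return Counter(e["tool"] for e in events if e["tool"] not in baseline)
```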

4. Protect & Enforce

Fail closed when provenance is missing. Enforce build attestation. Require dependency source controls. Monitor CI/CD for drift and anomalous execution. Block high-risk artifacts before deployment.
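“Fail closed” is easiest to see as a gate whose default answer is no. In the sketch below, the `ReleaseCandidate` fields and the `ALLOWED_REGISTRIES` value are hypothetical outputs of earlier pipeline stages; an unknown value (`None`) counts as a failure, so a skipped check blocks the release instead of silently passing.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical approved dependency source.
ALLOWED_REGISTRIES = {"https://registry.internal.example"}


@dataclass
class ReleaseCandidate:
    # Hypothetical results from earlier pipeline stages. None means the check
    # did not run or produced no answer, which the gate treats as a failure.
    has_provenance: bool | None = None
    attestation_verified: bool | None = None
    dependency_registries: set[str] = field(default_factory=set)


def deployment_gate(rc: ReleaseCandidate) -> tuple[bool, list[str]]:
    """Fail closed: any missing or negative signal blocks deployment."""
    reasons = []
    if rc.has_provenance is not True:
        reasons.append("provenance missing")
    if rc.attestation_verified is not True:
        reasons.append("build attestation not verified")
    unapproved = rc.dependency_registries - ALLOWED_REGISTRIES
    if unapproved:
        reasons.append(f"dependencies from unapproved sources: {sorted(unapproved)}")
    return (not reasons, reasons)
```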

CISA’s Known Exploited Vulnerabilities (KEV) Catalog reinforces why exploit intelligence must complement static severity scoring. Prioritization must align with active attacker behavior, not theoretical risk.
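One way to align prioritization with attacker behavior is to sort findings so that anything listed in the KEV catalog comes first, with CVSS acting only as a tiebreaker within each group. The sketch assumes the catalog’s JSON feed has already been downloaded and parsed, with entries under a `vulnerabilities` key that each carry a `cveID` field; the `Finding` type is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str
    cvss: float


def kev_ids(catalog: dict) -> set[str]:
    # Assumes the KEV JSON feed shape: {"vulnerabilities": [{"cveID": ...}, ...]}.
    return {v["cveID"] for v in catalog.get("vulnerabilities", [])}


def prioritize(findings: list[Finding], known_exploited: set[str]) -> list[Finding]:
    """Known-exploited CVEs first, then by CVSS score, highest first."""
    # False sorts before True, so KEV entries (not-in == False) lead.
    return sorted(findings, key=lambda f: (f.cve_id not in known_exploited, -f.cvss))
```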

In short, enforcement must be automated and deterministic so that it keeps pace with AI-generated change.

Business and Regulatory Implications

AI-assisted development is no longer a niche engineering experiment. It intersects directly with regulatory expectations.

The NIS2 Directive requires cybersecurity risk management across development, supply chain, and incident handling.

The Digital Operational Resilience Act (DORA) mandates ICT risk management, resilience testing, and third-party governance in the financial sector.

NIST AI RMF and its Generative AI Profile further emphasize governance, traceability, and continuous monitoring.

Organizations that treat vibe coding as an unmanaged convenience risk regulatory friction, audit failures, and increased breach cost exposure.

Conclusion: The Future of AppSec Depends on Vibe Coding Security

AI coding is inevitable.

Unmanaged AI coding is optional.

Traditional AppSec remains foundational. However, under vibe coding, artifact scanning alone cannot guarantee security. The failure mode has shifted from isolated defects to decision-chain integrity.

AI Vibe Coding Security requires:

- Governance over AI capability

- Continuous lifecycle risk management

- Provenance and build integrity enforcement

- Least-privilege tool execution

- Workflow-level visibility

Securing code is no longer sufficient.

Securing the workflow, from prompt to deployment, is the real frontier.


About the Author

Marcos Martín is a cybersecurity engineer focused on AI-driven application security, secure SDLC design, and software supply chain integrity. His work centers on bridging modern DevSecOps practices with emerging risks introduced by AI-assisted development, agentic systems, and automated CI/CD environments.

Marcos contributes research and practical guidance on AI Vibe Coding Security, workflow assurance, and build integrity models aligned with frameworks such as NIST AI RMF, OWASP LLM and Agentic guidance, and SLSA.