Artificial intelligence is transforming cybersecurity. It enables faster threat detection, smarter automation, and better decision-making. Yet while AI improves protection, it also introduces new vulnerabilities. Understanding AI security, AI in cyber security, and AI security risks is essential for building safe, reliable systems.

Modern applications rely on AI models to generate code, analyze data, or detect anomalies. However, these models can be tricked, poisoned, or misused. Attackers exploit AI systems just like any other software component, turning innovation into an attack surface. That is why securing AI is now a priority for every DevSecOps team.

What Is AI Security and Why It Matters

AI security focuses on protecting the models, data, and infrastructure that power artificial intelligence. It differs from traditional cybersecurity because it must address how AI learns, behaves, and interacts with users and external systems.

In simple terms, AI in cyber security helps defend applications, while AI security protects the AI itself. The goal is to keep models trustworthy, prevent data leaks, and stop manipulation of prompts or predictions.

According to Gartner, more than half of future AI incidents will exploit access control weaknesses through prompt injection or data exposure. If that prediction holds, securing AI systems will require both governance and real-time visibility.

The Expanding Risk Surface of AI Systems

Every AI model connects to multiple layers: data sources, APIs, pipelines, and users. Each layer can introduce risk. Some of the most common AI security risks include:

| AI Risk Type | Description | Potential Impact |
|---|---|---|
| Prompt Injection | Attackers insert hidden or malicious instructions into prompts to alter model behavior. | Unauthorized model actions, data exfiltration. |
| Data Leakage | Sensitive or proprietary data is unintentionally exposed through model outputs or logs. | Privacy loss, exposure of intellectual property. |
| Model Poisoning | Malicious training data modifies model behavior or introduces backdoors. | Manipulated predictions, degraded accuracy, corrupted models. |
| API or MCP Misconfiguration | Weak authentication or unvalidated model connectors allow external misuse. | Unauthorized access, data leaks, compromised integrations. |
| Access Control Gaps | Overly permissive API keys or missing validation controls for AI services. | Privilege escalation, misuse of resources, exposure of sensitive functions. |

Misconfigured API keys or unvalidated model connectors (like MCP integrations) often become gateways for unauthorized access or data leakage. These AI security risks can easily reach CI/CD pipelines, where insecure integrations or exposed tokens compromise entire workflows. Therefore, building protection around every AI layer is fundamental for resilient systems.
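To make the exposed-token risk concrete, here is a minimal Python sketch of a check that flags credentials hard-coded in a pipeline definition. The function name `find_exposed_tokens`, the two regular expressions, and the sample pipeline text are all assumptions made for illustration. They are not Xygeni's detection rules, and real secret scanners rely on much larger, provider-specific rule sets.

```python
import re

# Illustrative patterns only (assumptions, not a production rule set).
TOKEN_PATTERNS = {
    # A key-like name followed by a long literal value.
    "generic_api_key": re.compile(
        r"(?i)(api[_-]?key|token|secret)\w*\s*[:=]\s*['\"]?([A-Za-z0-9_\-]{20,})"
    ),
    # A bearer token written directly into a header.
    "bearer_header": re.compile(
        r"(?i)authorization:\s*bearer\s+([A-Za-z0-9._\-]{20,})"
    ),
}


def find_exposed_tokens(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for lines that appear to embed a credential."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in TOKEN_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


# Hypothetical pipeline snippet: one hard-coded key, one injected from a secret store.
pipeline_yaml = """\
steps:
  - name: call-model
    env:
      MODEL_API_KEY: "abcd1234abcd1234abcd1234abcd"
      MODEL_REGION: "eu-west-1"
  - name: safe-step
    env:
      MODEL_API_KEY: ${{ secrets.MODEL_API_KEY }}
"""

for lineno, rule in find_exposed_tokens(pipeline_yaml):
    print(f"line {lineno}: possible hard-coded credential ({rule})")
```

Only the literal value is flagged. The second step reads the key from a secret store at run time, so the credential never appears in the file.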

How AI Security Is Evolving in Modern DevSecOps

AI security is moving earlier in the software lifecycle. Instead of waiting until production, security now begins at code creation, dependency selection, and model integration. This “shift left” mindset is critical for AI in cyber security because risks often appear long before deployment.

AI Security Testing (AI-ST) focuses on identifying weaknesses such as prompt injection, model inversion, or data poisoning before models are used in production. It helps developers verify that AI code, datasets, and connectors behave safely and comply with internal security rules.

Xygeni supports this proactive approach through continuous scanning, policy guardrails, and automated remediation workflows. Its ASPM platform unifies code analysis, dependency monitoring, and configuration checks, helping teams detect and fix AI security risks early in development.

By embedding security into the CI/CD process, organizations can catch vulnerabilities before they propagate, ensuring that AI-powered features remain reliable, auditable, and compliant from the start.

Securing AI Workflows with Xygeni’s ASPM Platform

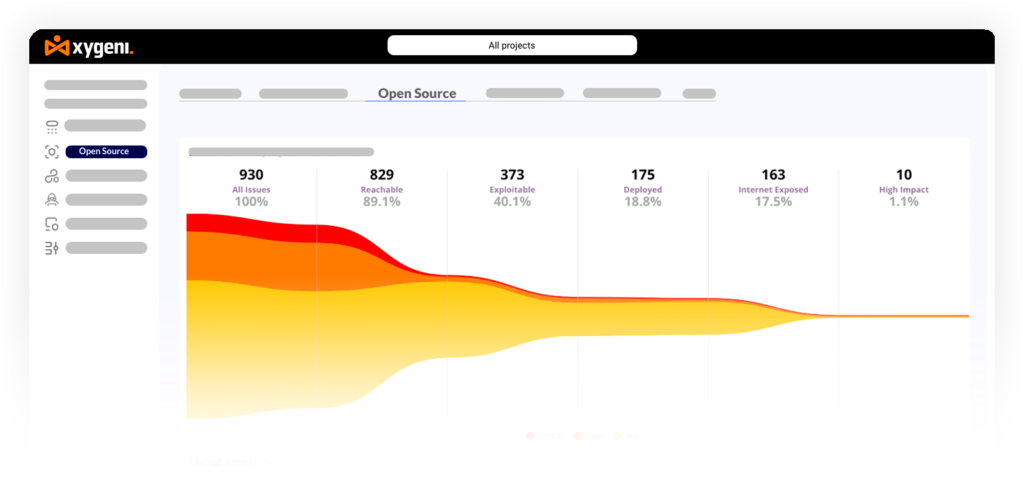

Xygeni extends these protection layers across the software supply chain. Its Application Security Posture Management (ASPM) platform unifies risk visibility from code to cloud, making it easier to identify and prioritize AI-related vulnerabilities.

With dynamic prioritization funnels, Xygeni filters findings by severity, exploitability, and business impact, helping teams focus on real risks instead of noise. Version 5.28 introduces new Guardrails that perform local and server-side rule evaluations, ensuring consistent policy enforcement across repositories, even those containing AI-generated or AI-assisted code.

This level of control helps developers integrate AI safely while maintaining compliance and development speed.

From Detection to Fix: How Xygeni Handles AI Security Risks

When a critical finding related to AI security appears, the remediation flow is straightforward: detect with policies, prioritize with context, and fix automatically.

- The scan detects a prompt injection in a connector; the policy flags it as blocking.

- The Prioritization Funnel ranks the issue by severity and reachability.

- Xygeni Bot creates a pull request with the suggested fix; the reviewer approves or adjusts it.

- Guardrails verify the fix both locally and server-side; only compliant code can merge.

- AI Auto-Fix with your custom model strengthens the patch before release.

This workflow turns AI in cyber security from theory into daily practice.

AI Risk Prioritization Matrix

| Signal | How to Evaluate | Recommended Action |
|---|---|---|
| Exploitability | Is the vulnerability reachable through user-controlled input? | Raise priority; review input validation and prompt filters. |
| Asset Criticality | Does the model handle sensitive data or privileged APIs? | Apply blocking Guardrails; require manual approval. |
| Blast Radius | Could misuse of one connector affect multiple services? | Segment scopes; rotate credentials; limit connector access. |
| Regression Risk | Would an upgrade introduce breaking changes? | Use Xygeni’s Remediation Risk to choose a safe version. |
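The matrix above can be read as a small scoring routine. The following Python sketch is one possible interpretation, not Xygeni's prioritization logic: the `Finding` class, the numeric weights, and the sample findings are hypothetical and exist only to show how the four signals could combine into a ranking and a list of actions.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    user_reachable: bool    # Exploitability: reachable through user-controlled input?
    sensitive_asset: bool   # Asset Criticality: sensitive data or privileged APIs?
    shared_connector: bool  # Blast Radius: could misuse affect multiple services?
    breaking_upgrade: bool  # Regression Risk: would the fix introduce breaking changes?


# Hypothetical weights, chosen only to make the ordering visible.
WEIGHTS = {"user_reachable": 4, "sensitive_asset": 3, "shared_connector": 2}


def priority(finding: Finding) -> int:
    """Sum the weights of every risk signal that is present."""
    return sum(w for attr, w in WEIGHTS.items() if getattr(finding, attr))


def actions(finding: Finding) -> list[str]:
    """Map each present signal to the action recommended in the matrix."""
    steps = []
    if finding.user_reachable:
        steps.append("review input validation and prompt filters")
    if finding.sensitive_asset:
        steps.append("apply blocking guardrails and require manual approval")
    if finding.shared_connector:
        steps.append("segment scopes, rotate credentials, limit connector access")
    if finding.breaking_upgrade:
        steps.append("check remediation risk before choosing a version")
    return steps


findings = [
    Finding("outdated dependency", False, False, True, True),
    Finding("prompt injection in connector", True, True, True, False),
    Finding("verbose model logging", False, True, False, False),
]

ranked = sorted(findings, key=priority, reverse=True)
for f in ranked:
    print(priority(f), f.name, actions(f))
```

Regression risk is deliberately kept out of the score in this sketch. It describes how risky the fix is rather than how urgent the finding is, so it only adds an action.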

Practical Guardrails for AI Security

<pre><code>{
  "policies": [
    { "id": "ai.mcp.restrict.origins", "rule": "mcp_allowed_origins in ['internal://tools','local://workspace']", "mode": "block" },
    { "id": "ai.api.keys.scoped", "rule": "api_key.scope in ['inference','readonly'] and api_key.expiry_days <= 30", "mode": "warn" },
    { "id": "prompt.inputs.sanitize", "rule": "input.prompt.validated == true and input.size_kb <= 64", "mode": "block" }
  ]
}</code></pre>

These Guardrails apply both locally and on the server, ensuring that AI security policies are enforced inside CI and across repositories. They bring transparency and repeatability to AI in cyber security, turning governance into code.
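For readers who want to see how rules like these might behave, the sketch below re-expresses the three policies as plain Python predicates and evaluates them against a configuration snapshot. It does not parse the rule strings and is not Xygeni's evaluation engine. The shape of the `ctx` dictionary, the `evaluate` function, and the reading of the first rule as "every configured origin must be on the allowlist" are assumptions.

```python
ALLOWED_ORIGINS = {"internal://tools", "local://workspace"}
ALLOWED_SCOPES = {"inference", "readonly"}

# (policy id, mode, predicate). Each predicate returns True when the context complies.
POLICIES = [
    ("ai.mcp.restrict.origins", "block",
     lambda c: set(c["mcp_allowed_origins"]) <= ALLOWED_ORIGINS),
    ("ai.api.keys.scoped", "warn",
     lambda c: c["api_key"]["scope"] in ALLOWED_SCOPES
     and c["api_key"]["expiry_days"] <= 30),
    ("prompt.inputs.sanitize", "block",
     lambda c: c["input"]["prompt"]["validated"]
     and c["input"]["size_kb"] <= 64),
]


def evaluate(ctx: dict) -> tuple[bool, list[tuple[str, str]]]:
    """Return (merge_allowed, violations); any violation in 'block' mode stops the merge."""
    violations = [(pid, mode) for pid, mode, rule in POLICIES if not rule(ctx)]
    merge_allowed = all(mode != "block" for _, mode in violations)
    return merge_allowed, violations


# Hypothetical snapshot: one unapproved MCP origin and a long-lived API key.
ctx = {
    "mcp_allowed_origins": ["internal://tools", "https://example.com/mcp"],
    "api_key": {"scope": "inference", "expiry_days": 90},
    "input": {"prompt": {"validated": True}, "size_kb": 12},
}

allowed, violations = evaluate(ctx)
print("merge allowed:", allowed)
for policy_id, mode in violations:
    print(f"{mode.upper()}: {policy_id}")
```

In this example the long-lived key only produces a warning, while the unapproved origin blocks the merge, mirroring the `mode` values in the policy file.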

Xygeni Bot: Automated Remediation for a Secure AI Era

Automation has become essential for modern security operations. The Xygeni Bot adds automation directly into the remediation workflow for SAST, SCA, and Secrets findings.

Teams can define how and when fixes are applied:

- On demand for manual control

- On every pull request to keep branches clean

- On a daily schedule for continuous upkeep

The bot automatically generates pull requests with recommended fixes. Developers only need to review and merge. This continuous loop ensures that vulnerabilities are fixed early, reducing security debt and maintaining cleaner pipelines without disrupting work.

AI Auto-Fix with Customer Models: Privacy Meets Automation

AI-driven remediation takes automation further. With Version 5.28, AI Auto-Fix allows organizations to use their own AI models for code remediation. Supported providers include OpenAI, Google Gemini, Anthropic Claude, Groq, and OpenRouter.

Instead of routing code through an additional third-party service, teams connect the CLI directly to the model they have configured, so source code stays within a provider relationship they already control. They can also execute unlimited fixes, aligning automation with their governance and privacy requirements.

This approach gives companies flexibility and control while accelerating the remediation process. It also ensures that AI assistance strengthens security without exposing sensitive assets.

Real Applications of AI Security in Cyber Defense

AI security is not only about protecting AI models. It also helps organizations defend their systems and pipelines better. Today, many security teams use AI in cyber security to analyze logs, find strange behavior, and rank vulnerabilities based on how easy they are to exploit.

At the same time, Xygeni uses AI safely within its own platform. With tools such as reachability analysis, EPSS-based scoring, and auto-remediation, Xygeni helps teams make smarter and faster decisions. As a result, AI security becomes part of daily work, not a separate task.

In addition, this approach makes AI a trusted helper instead of a hidden risk. It brings more visibility and control to the software development process, helping teams act faster when problems appear.

Best Practices for AI Security in Development

Keeping AI safe requires teamwork and attention to detail. Developers can protect their pipelines by following these simple steps:

- Keep a list of all AI models, endpoints, and connectors.

- Limit access to sensitive APIs and prompts to the least privilege needed.

- Check and clean inputs before sending them to any model.

- Watch the outputs to spot strange or risky results.

- Use ASPM tools to see all risks in one place and apply security rules automatically.

By following these steps, teams can reduce AI security risks, stop leaks, and avoid data misuse. These habits also make it easier to keep control as AI tools become part of more projects.
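Two of these steps, cleaning inputs and watching outputs, lend themselves to a short Python sketch. Everything in it is an assumption made for illustration: the function names, the 64 KB limit (borrowed from the sample policy earlier in this article), the two suspicious phrases, and the rule for what looks like a secret. Pattern matching of this kind catches only crude attempts and is not a complete defense against prompt injection.

```python
import re

MAX_PROMPT_KB = 64  # assumed limit, mirroring the sample policy

# Illustrative phrases only; real filters need far broader coverage.
SUSPICIOUS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (the |your )?system prompt", re.I),
]

# Long unbroken alphanumeric runs often indicate keys or tokens.
SECRET_LIKE = re.compile(r"\b[A-Za-z0-9]{32,}\b")


def clean_prompt(raw: str) -> str:
    """Strip control characters, enforce a size limit, and reject obvious injections."""
    cleaned = "".join(ch for ch in raw if ch.isprintable() or ch in "\n\t")
    if len(cleaned.encode("utf-8")) > MAX_PROMPT_KB * 1024:
        raise ValueError("prompt exceeds size limit")
    for pattern in SUSPICIOUS:
        if pattern.search(cleaned):
            raise ValueError("prompt contains a suspicious instruction")
    return cleaned.strip()


def redact_output(text: str) -> str:
    """Mask secret-looking strings before model output is logged or displayed."""
    return SECRET_LIKE.sub("[REDACTED]", text)


print(clean_prompt("Summarise this log\x00 file\n"))
print(redact_output("key=" + "a1b2c3d4" * 4))
```

Rejecting a prompt outright is the strictest option. A team might prefer to log and route flagged prompts for review instead, depending on how many false positives they can tolerate.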

Checklist: Ready-to-Ship Secure AI

Before you release your project, check that you have:

- A full list of all AI models, endpoints, and connectors

- Guardrails for MCP and API keys set to “block”

- Pull request scans with Xygeni Bot and daily runs for older findings

- Auto-Fix using your own model for private code fixes

- A Remediation Risk review before any dependency update

- A process aligned with recognized guidelines, such as the ENISA Guidelines on Securing AI

Following this checklist makes AI security a regular part of development, not something you do at the end. It helps teams ship safer software with less effort.

Quick FAQ

What is AI security in simple terms?

It’s the protection of AI models, data, and pipelines against manipulation, leakage, or misuse.

How does AI in cyber security change DevSecOps?

It adds automation, predictive prioritization, and context awareness to every security step.

Which AI security risks should teams fix first?

Those that are exploitable, high-impact, and reachable, especially prompt injection and data leakage.

Final Thoughts: Secure AI by Design

AI has become a vital part of modern development. However, innovation must go hand in hand with security. Protecting AI models, connectors, and data pipelines ensures that the benefits of automation do not come with new vulnerabilities.

By combining AI security testing, runtime defense, and ASPM, organizations can prevent attacks before they escalate. With Xygeni Bot, AI Auto-Fix, and Guardrails, teams can automate remediation and governance without losing control or speed.

AI is powerful, but only secure AI can truly transform how we build and protect software.