On February 25, 2025, Apache released a security advisory for CVE-2025-30065, a critical remote code execution (RCE) flaw in Apache Parquet that directly affects the parquet-avro module in versions 1.15.0 and earlier. The vulnerability (CVSS 9.8) lets attackers craft malicious Parquet files that can trigger arbitrary code execution as soon as they are parsed.

In practice, any team that ingests or processes untrusted Parquet files in big data pipelines, ML workflows, or CI/CD ingestion jobs is at risk.

Immediate actions you should take:

- Upgrade to the fixed release (parquet-avro 1.15.1 or later) as soon as possible.

- Audit pipelines and dependencies for vulnerable versions.

- Validate Parquet and Avro files before ingestion.

- Enforce automated scanning and guardrails in CI/CD.
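The dependency audit in the list above can start with the build files themselves. The sketch below is a minimal illustration, assuming 1.15.1 as the fixed parquet-avro baseline and versions declared literally in `pom.xml` or Gradle files. The function names are hypothetical helpers, not part of any tool, and the version comparison relies on GNU `sort -V`.

```bash
#!/usr/bin/env bash
# Sketch: flag parquet-avro versions older than 1.15.1 in Maven/Gradle build files.
# Assumption: versions are written literally next to the artifact
# (no ${property} placeholders); resolved trees need the build tool itself.

FIXED_VERSION="1.15.1"

# version_lt A B: succeed if version A sorts strictly before version B (GNU sort -V).
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# extract_versions FILE: print literal parquet-avro versions found in FILE.
extract_versions() {
  # Gradle coordinates, e.g. "org.apache.parquet:parquet-avro:1.13.1"
  grep -o 'parquet-avro:[0-9][0-9.]*' "$1" | cut -d: -f2
  # Maven: a <version> element within two lines after the artifactId element
  grep -A2 '<artifactId>parquet-avro</artifactId>' "$1" \
    | grep -o '<version>[0-9][0-9.]*</version>' | sed 's/<[^>]*>//g'
  return 0
}

# scan_build_files DIR: report every build file that pins a vulnerable version.
scan_build_files() {
  find "$1" -type f \( -name pom.xml -o -name '*.gradle' -o -name '*.gradle.kts' \) |
    while IFS= read -r file; do
      for version in $(extract_versions "$file"); do
        if version_lt "$version" "$FIXED_VERSION"; then
          echo "$file: parquet-avro $version (< $FIXED_VERSION)"
        fi
      done
    done
  return 0
}
```

Run `scan_build_files .` from a repository root; each output line names a file to upgrade. Property placeholders, BOMs, and transitive dependencies slip past this grep-based check, so treat an empty result as "nothing obvious", not as "safe".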

1. What Happened?

CVE-2025-30065 is a new flaw in Apache Parquet, one of the most common storage formats in analytics, data engineering, and machine learning. The problem comes from unsafe handling of serialized data. Attackers can use this weakness to run their own code on the host as soon as the file is parsed.

The risk is concentrated in Parquet Avro integrations, where Avro schemas embedded in a Parquet file's metadata can trick the reader into loading harmful classes or performing unsafe type conversions. Consequently, a single malicious dataset in an ETL job, ML training run, or shared cloud storage can quickly put the whole environment at risk.

This vulnerability is not just another software bug: it creates a supply chain risk at the data layer. Pipelines that routinely process external or partner-provided Parquet files face the highest risk, because until they are patched, a single crafted dataset gives attackers a direct path to remote code execution inside CI/CD jobs, data platforms, and cloud workloads.

Timeline of CVE-2025-30065 Disclosure

- February 19, 2025: Security researchers privately reported a flaw in the Apache Parquet parsing logic.

- February 21 to 24, 2025: The Apache Parquet team validated the issue, confirmed the potential for remote code execution (RCE), and prepared patched versions.

- February 25, 2025: Apache published an official advisory and assigned CVE-2025-30065 with a CVSS score of 9.8 (Critical). Fixed versions of Apache Parquet and Parquet Avro became available the same day.

- February 26, 2025 and onward: Security vendors and cloud providers began publishing detection guidance. Proof-of-concept exploits appeared in canary testing tools, which raised concerns about rapid weaponization.

- March 2025: Organizations started auditing their pipelines and storage layers for exposure. Data platforms and ML workflows quickly became high-value targets for exploitation.

2. Technical Breakdown: Why CVE-2025-30065 Matters

Vulnerability Vector

CVE-2025-30065 comes from unsafe deserialization and schema handling in Apache Parquet. When a crafted file is parsed, attacker-supplied schema metadata steers the reader into running arbitrary code on the host.

Parquet Avro integrations are especially risky. Malicious Avro schemas hidden inside Parquet files can load attacker-controlled classes or force unsafe type changes.

In short, the flaw turns simple data ingestion into code execution. Therefore, any workflow that consumes untrusted Parquet files, whether from partners, public datasets, or shared cloud storage, can become a way in for attackers.
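To make the class-loading vector concrete: Avro's Java bindings read schema properties such as `java-class` to decide which Java class to instantiate for a value, and this sketch also flags the related `java-key-class` and `java-element-class` properties. The heuristic below reports any such hint in an Avro schema string. It is a minimal sketch, assuming these properties are the signal worth quarantining on; the function names and the sample class `com.example.Payload` are hypothetical, and a clean result does not prove a schema is safe.

```bash
#!/usr/bin/env bash
# Heuristic sketch: flag Avro schema JSON that carries Java class-loading hints.
# Assumption: these properties are the signal worth quarantining on; a clean
# result is NOT proof that a schema is safe.
CLASS_HINT_PATTERN='"java-(key-|element-)?class"[[:space:]]*:[[:space:]]*"[^"]*"'

# class_hints SCHEMA_JSON: print each class-hint property found, one per line.
class_hints() {
  printf '%s\n' "$1" | grep -oE "$CLASS_HINT_PATTERN"
  return 0
}

# schema_is_suspicious SCHEMA_JSON: succeed if any class hint is present.
schema_is_suspicious() {
  [ -n "$(class_hints "$1")" ]
}
```

In practice the schema string comes from the `parquet.avro.schema` entry in a file's footer metadata, for example the `extra:` line printed by `parquet-tools meta`.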

Real-World Attack Scenarios

- ETL and Data Pipelines: CI/CD jobs that transform incoming Parquet files may execute malicious payloads, letting attackers compromise the runner and any systems it can reach.

- Machine Learning Training Workloads: Malicious Parquet datasets can plant backdoors during preprocessing or steal secrets from ML environments.

- Shared Cloud Storage: If one tenant uploads a poisoned dataset into a shared S3 bucket or data lake, every workload that reads it is exposed, so the compromise can spread laterally across many workloads.

Why It Is Critical

- Low barrier to entry: An attacker only needs to place one crafted file in the data flow.

- High blast radius: Once executed, the exploit runs with the same permissions as the job, often including access to cloud keys, storage, or orchestration systems.

- Supply chain nature: This is not only a bug in one application. Instead, it is a broad weakness that puts the entire ecosystem using Apache Parquet and Avro at risk.
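The blast-radius point can be checked directly: whatever credentials an ingestion job can see, a compromised parser running inside it can see too. The sketch below lists the names, never the values, of credential-looking environment variables in the current job. The name pattern and the function name are hypothetical starting points, and environment variables are only one channel; mounted credential files and cloud instance metadata endpoints are not covered.

```bash
#!/usr/bin/env bash
# Sketch: inventory credential-looking environment variable NAMES that a
# hijacked Parquet parser would inherit from the job. Values are never printed.

# Hypothetical name pattern; tune it to your own naming conventions.
CREDENTIAL_NAME_PATTERN='KEY|TOKEN|SECRET|PASSW(OR)?D|CREDENTIAL'

# exposed_credential_names: print matching variable names, sorted and de-duplicated.
exposed_credential_names() {
  # Keep only the identifier before "=" so no value text reaches the output.
  env | sed -n 's/^\([A-Za-z_][A-Za-z0-9_]*\)=.*/\1/p' \
    | grep -E "$CREDENTIAL_NAME_PATTERN" | sort -u
  return 0
}
```

Running `exposed_credential_names` as an early step in an ingestion job gives a quick picture of what that job would hand to an attacker, and a list of secrets to scope down.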

3. Executive Impact of CVE-2025-30065

According to the NVD advisory and the CVE.org record, this vulnerability has a CVSS score of 9.8 (Critical). That score highlights not only how easy it is to exploit but also how severe the consequences can be once a poisoned dataset enters the pipeline.
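As a worked illustration of how a 9.8 arises, assume the record uses the CVSS v3.1 vector AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H (network attack, low complexity, no privileges, no user interaction, high confidentiality, integrity, and availability impact); check the NVD entry for the exact vector. With the weights from the CVSS v3.1 specification, the base score works out as:

$$
\begin{aligned}
\text{ISS} &= 1 - (1 - 0.56)^3 = 0.914816 \\
\text{Impact} &= 6.42 \times \text{ISS} \approx 5.8731 \\
\text{Exploitability} &= 8.22 \times \underbrace{0.85}_{\text{AV:N}} \times \underbrace{0.77}_{\text{AC:L}} \times \underbrace{0.85}_{\text{PR:N}} \times \underbrace{0.85}_{\text{UI:N}} \approx 3.8870 \\
\text{Base} &= \operatorname{Roundup}\big(\min(5.8731 + 3.8870,\ 10)\big) = \operatorname{Roundup}(9.7602) = 9.8
\end{aligned}
$$

Under that assumption, both sub-scores sit at their maximum possible values for an unchanged-scope vulnerability, which is exactly the "easy to exploit, severe once exploited" combination the score signals.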

At the business level, the risks are significant:

- Data theft: Attackers may gain access to sensitive datasets, including financial or personal data.

- Pipeline takeover: Compromised ingestion jobs can give attackers a pivot into cloud accounts, code repositories, or production systems.

- Regulatory exposure: If the exploit leaks PII or regulated data, organizations may face non-compliance penalties under GDPR, HIPAA, or PCI DSS.

- Operational disruption: A single poisoned file can halt analytics jobs, delay ML workloads, and create cascading failures across cloud environments.

In short, CVE-2025-30065 is not just a bug in Apache Parquet. It represents a direct business and compliance risk with the potential to escalate quickly.

4. Affected Environments and Teams

CVE-2025-30065 does not only threaten data engineering teams. Because Apache Parquet and Parquet Avro are widely embedded across analytics, machine learning, and CI/CD, the blast radius is broad.

- Organizations using Apache Parquet directly: Any system parsing Parquet files (data warehouses, Spark clusters, or analytics tools) may be vulnerable.

- Data pipelines and ML workflows: ETL jobs or ML training pipelines that ingest external Parquet/Avro datasets are high-risk, since even one malicious file can compromise the environment.

- CI/CD jobs processing data: Automated build or test pipelines that parse Parquet or Avro may unknowingly execute attacker payloads during integration.

- Different environments, different risks: Development and test setups might only see disruption or leaks of sample data. In production, the risk jumps to stolen credentials, compliance violations, and full cloud account compromise.

The real danger lies in where the file gets consumed. A malicious dataset in a dev sandbox is an inconvenience. The same dataset parsed in production with access to cloud tokens becomes a critical incident.

5. How to Detect Exposure

```bash
# Find Parquet/Avro readers in code
grep -R "AvroParquetReader\|ParquetFileReader\|AvroSchema" -n src/ || true

# Flag unusually large or nested Parquet schemas: count the record
# definitions ("fields" keys) in the embedded Avro schema printed by meta
parquet-tools meta suspicious.parquet | grep -o '"fields"' | wc -l

# Detect ingestion logs with schema errors or deserialization failures
grep -R "AvroTypeException\|ClassCastException" logs/ || true
```
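The commands above assume parquet-tools is installed. For a dependency-free pre-ingestion check, the sketch below reads a Parquet file's framing directly: a valid file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes before the trailing magic hold the little-endian length of the footer metadata, which is where parquet-avro stores the embedded Avro schema (key `parquet.avro.schema`). The function names and the 1 MiB footer limit are hypothetical policy choices, the class-hint grep is only a heuristic, and files with encrypted footers (magic `PARE`) are simply rejected by this sketch.

```bash
#!/usr/bin/env bash
# Sketch: structural triage of a Parquet file before any parser touches it.
# Layout relied on: "PAR1" | data | footer | 4-byte LE footer length | "PAR1".
MAX_FOOTER_BYTES=$((1024 * 1024))   # hypothetical policy limit (1 MiB)

# footer_len FILE: decode the little-endian footer length before the trailing magic.
footer_len() {
  tail -c 8 "$1" | head -c 4 | od -An -tu1 |
    awk '{ print $1 + $2 * 256 + $3 * 65536 + $4 * 16777216 }'
}

# triage_parquet FILE: print "OK" or the reason the file should be quarantined.
triage_parquet() {
  local f="$1" size len
  size=$(($(wc -c < "$f")))
  if [ "$size" -lt 12 ]; then
    echo "REJECT: too small to be Parquet"; return 0
  fi
  # Plaintext-footer files carry PAR1 at both ends (encrypted footers use PARE).
  if [ "$(head -c 4 "$f")" != "PAR1" ] || [ "$(tail -c 4 "$f")" != "PAR1" ]; then
    echo "REJECT: missing PAR1 magic"; return 0
  fi
  len=$(footer_len "$f")
  if [ "$len" -gt $((size - 12)) ] || [ "$len" -gt "$MAX_FOOTER_BYTES" ]; then
    echo "REJECT: footer length $len out of bounds"; return 0
  fi
  # The footer is not compressed, so embedded schema text is greppable as bytes.
  if tail -c $((len + 8)) "$f" | head -c "$len" | LC_ALL=C grep -aqE '"java-(key-|element-)?class"'; then
    echo "REJECT: Java class hint in footer metadata"; return 0
  fi
  echo "OK"
}
```

A landing-zone job could run `for f in incoming/*.parquet; do echo "$f: $(triage_parquet "$f")"; done` and move anything not marked `OK` into quarantine before a Parquet reader ever opens it.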

6. How to Mitigate CVE-2025-30065

The first step is to cut exposure right away. Teams should upgrade to the patched Apache Parquet release (parquet-avro 1.15.1 or later), as noted in the Apache advisory. After patching, audit dependencies and SBOMs to confirm that no vulnerable versions remain in builds or containers. Pause any job that ingests Parquet or Avro files automatically until the fix is in place. In addition, treat external and partner datasets as untrusted and scan them before parsing. These actions close the immediate attack window and keep pipelines safer in the short term.
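For the "builds or containers" part of the audit, a first pass can search built artifacts for parquet-avro JARs by file name. This is a minimal sketch, assuming JARs keep Maven's `artifactId-version.jar` naming and 1.15.1 as the fixed baseline; `scan_jars` and `version_lt` are hypothetical helpers, and the comparison relies on GNU `sort -V`. Shaded or uber-JARs that bundle parquet-avro classes under another name will not show up, so an SBOM-based check is still needed for full coverage.

```bash
#!/usr/bin/env bash
# Sketch: find parquet-avro JARs older than 1.15.1 in a build output or an
# unpacked container filesystem, based only on Maven-style file names.

FIXED_VERSION="1.15.1"

# version_lt A B: succeed if version A sorts strictly before version B (GNU sort -V).
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# scan_jars DIR: print one line per vulnerable parquet-avro JAR below DIR.
scan_jars() {
  find "$1" -type f -name 'parquet-avro-*.jar' |
    while IFS= read -r jar; do
      name=$(basename "$jar" .jar)      # e.g. parquet-avro-1.13.1
      version=${name#parquet-avro-}     # e.g. 1.13.1
      if version_lt "$version" "$FIXED_VERSION"; then
        echo "VULNERABLE: $jar ($version)"
      fi
    done
  return 0
}
```

Point it at a `lib/` directory or an exported container filesystem, for example `scan_jars /opt/app`, and fail the build if it prints anything.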

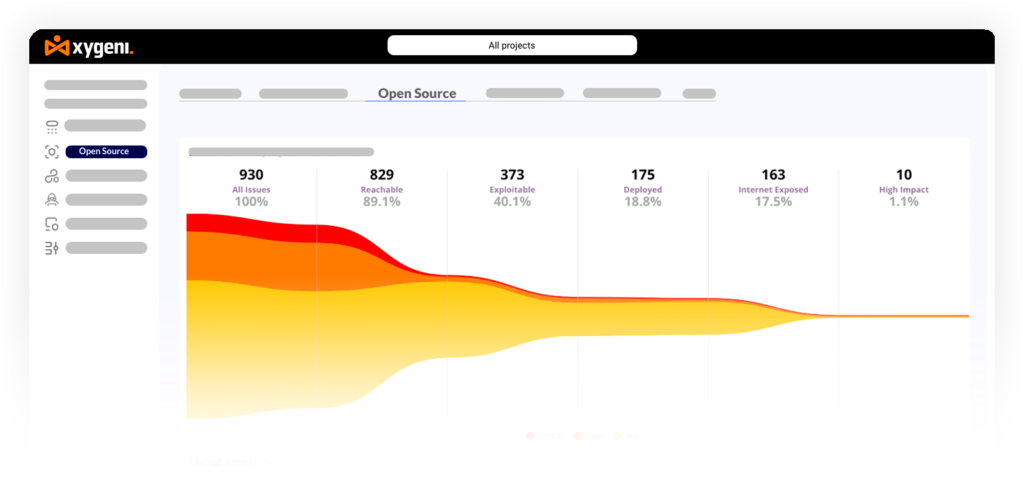

Looking ahead, organizations need guardrails that stop the next exploit before it spreads. This is where Xygeni adds value. The platform scans Parquet and Avro files before they reach a pipeline and blocks those with abnormal schemas, oversized metadata, or suspicious patterns. It also enforces safe versions of libraries, preventing developers from reintroducing vulnerable parquet-avro components. Moreover, Xygeni policies run as code, which means pipelines automatically reject unsafe ingestion paths and require attestations for high-risk formats.

Even if a malicious file executes, Xygeni limits the impact. It detects leaked credentials, rotates tokens, and alerts teams quickly. At the same time, anomaly detection spots strange ingestion activity, such as sudden spikes of new Parquet datasets or unusual schema changes, and raises alerts before damage grows. Finally, AutoFix with Remediation Risk speeds up secure patching by opening pull requests that upgrade parquet-avro to fixed versions and testing that upgrades remain stable.
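To illustrate the spike-detection idea in its simplest form (a generic fixed-threshold sketch, not how Xygeni implements anomaly detection), the functions below count Parquet files modified in a landing directory within a recent window and alert when the count exceeds a threshold. The directory, window, threshold, and function names are hypothetical; a real detector would learn a baseline rather than use a constant.

```bash
#!/usr/bin/env bash
# Generic sketch: alert on a sudden burst of new Parquet files in a landing zone.

# count_recent_parquet DIR MINUTES: number of .parquet files modified in the last MINUTES.
count_recent_parquet() {
  find "$1" -type f -name '*.parquet' -mmin "-$2" | wc -l | tr -d ' '
}

# ingestion_spike DIR MINUTES THRESHOLD: print an alert and succeed if the
# recent count exceeds THRESHOLD; otherwise return non-zero quietly.
ingestion_spike() {
  local n
  n=$(count_recent_parquet "$1" "$2")
  if [ "$n" -gt "$3" ]; then
    echo "ALERT: $n new Parquet files in the last $2 min (threshold $3)"
    return 0
  fi
  return 1
}
```

A scheduled job could call `ingestion_spike /data/landing 60 500` and page someone, or pause downstream ingestion, when it succeeds.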

Mitigation should work on two levels: prevention now and resilience later. Teams can block the exploit immediately by patching and auditing pipelines. Over time, Xygeni strengthens defenses by combining artifact scanning, dependency governance, secrets protection, anomaly detection, and automated fixes, ensuring pipelines stay secure against the next Parquet-style exploit.

8. Conclusion: Lessons Learned

CVE-2025-30065 shows that supply chain risks are not limited to code: even widely used data formats like Parquet and Avro can become attack vectors. The flaw underscores three lessons:

- Every dataset can be hostile. Treat incoming data with the same scrutiny as external code.

- Pipelines expand the attack surface. ETL jobs, CI/CD ingestion, and ML workflows are high-value targets for attackers.

- Resilience requires automation. Manual reviews cannot keep up with modern exploit timelines.

By combining patching, scanning, and programmable guardrails, organizations can defend against CVE-2025-30065 and future Parquet/Avro-style exploits.

At Xygeni, we believe the sustainable path is embedding supply chain security directly into pipelines, where data, code, and workloads intersect. With artifact scanning, dependency governance, and automated remediation, DevSecOps teams can stop data-driven RCE before it escalates into a breach.