Introduction: Why Hugging Face AI Matters

Hugging Face AI has become one of the most influential platforms in artificial intelligence. It provides developers with access to pre-trained models, datasets, and collaborative tools that accelerate innovation. Knowing how to use Hugging Face correctly helps teams build faster, automate tasks, and experiment with large language models confidently.

However, open environments also create new risks. Misconfigured tokens, public repositories, or unsafe workflows can expose data. Therefore, integrating Hugging Face security from the start is essential. In this guide, we explain what the platform is, how to use it effectively, and how to secure your models step by step.

What Is Hugging Face AI?

Hugging Face AI is an open-source platform that provides access to thousands of machine learning models and datasets. It helps developers build, share, and deploy AI applications efficiently. By following good security practices, teams can use it safely to experiment with large language models and automate tasks in production environments.

Exploring the Hugging Face Ecosystem

The Hugging Face platform is a community hub for AI and machine learning. It hosts thousands of open-source models and datasets used for natural language processing, computer vision, and speech applications.

In addition, the ecosystem includes well-known libraries such as Transformers, Datasets, and Tokenizers, which simplify model development. For example, you can install all three with a single command:

pip install transformers datasets tokenizers

As a result, developers can explore, test, and share models quickly. This flexibility is powerful, but to keep projects safe, applying Hugging Face security principles remains a priority.
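After installing, it can help to record which versions of these libraries are actually present, so that an environment can be reproduced and reviewed later. The following is a minimal sketch using only the Python standard library; the function name `installed_versions` is a hypothetical helper, not part of any Hugging Face package.

```python
from importlib import metadata


def installed_versions(packages=("transformers", "datasets", "tokenizers")):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # The package is not installed in this environment.
            versions[name] = None
    return versions
```

Calling `installed_versions()` returns a dictionary that can be printed or written to a log next to your experiment results.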

How to Use Hugging Face in Daily Development

Learning how to use this platform starts with understanding its workflow. The process is simple, yet each step benefits from attention to safety.

- Install the library to access model hubs and pipelines.

- Load a pre-trained model and test it locally.

- Authenticate securely using personal tokens stored in environment variables.

For example:

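from transformers import pipeline

generator = pipeline("text-generation", model="gpt2")  # downloads the gpt2 weights on first use

print(generator("Welcome to Hugging Face AI", max_new_tokens=20))  # list of dicts with a "generated_text" key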

Moreover, always verify model sources before using them in production. Unverified uploads might contain malicious code: pickle-based weight files, for instance, can execute arbitrary code when they are loaded. Following basic Hugging Face security rules, such as scanning files and keeping tokens private, helps prevent breaches.
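The token-handling step from the list above could look roughly like this. It is a sketch under a few assumptions: the token is exported as the `HF_TOKEN` environment variable, and the helper names `read_hf_token`, `mask`, and `authenticate` are hypothetical. Only `login` comes from the `huggingface_hub` package.

```python
import os


def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the access token from the environment, or fail loudly if it is unset."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"{env_var} is not set; export it instead of hard-coding a token.")
    return token


def mask(token: str) -> str:
    """Replace everything except the last four characters so the value is safer to log."""
    return "*" * max(len(token) - 4, 0) + token[-4:]


def authenticate() -> None:
    """Log in to the Hub with the token taken from the environment."""
    # Imported lazily so the helpers above work without huggingface_hub installed.
    from huggingface_hub import login

    token = read_hf_token()
    login(token=token)
    print("Authenticated with token", mask(token))
```

Keeping the token out of source files means it never lands in version control, and masking it before printing keeps it out of build logs.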

Common Use Cases and Applications

Hugging Face supports a wide range of AI tasks. Developers use it for text classification, translation, sentiment analysis, and image generation, bridging research and practical deployment.

The platform also enables companies to fine-tune large models, share them publicly, and integrate them into APIs or mobile applications, making it one of the fastest ways to prototype and deploy intelligent services.

When sharing resources publicly, always verify permissions and licenses. Following strong security practices allows collaboration without losing control of your data.

Downloading and Running Models Securely

Many developers prefer to run models locally for privacy or performance reasons. You can download them easily:

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

model = AutoModel.from_pretrained("bert-base-uncased")

Before executing, confirm that the model comes from a verified source. In addition, scan the repository for hidden scripts or dependencies. Storing authentication tokens securely and isolating environments in containers ensures that local setups follow Hugging Face security standards.
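One way to approach the repository scan described above is to list a repository's files before downloading anything and flag those that can run code. The sketch below is illustrative only: the suffix lists are assumptions rather than an official classification, and `flag_risky_files` and `audit_repo` are hypothetical helper names. `list_repo_files` is provided by `huggingface_hub` and needs network access.

```python
from pathlib import PurePosixPath

# Assumption for this sketch: weight formats that are usually pickle-based,
# and file types that are directly executable.
PICKLE_SUFFIXES = {".bin", ".pt", ".pth", ".pkl", ".pickle", ".ckpt"}
SCRIPT_SUFFIXES = {".py", ".sh"}


def flag_risky_files(filenames):
    """Group file names that may execute code when loaded or run."""
    flagged = {"pickle": [], "script": []}
    for name in filenames:
        suffix = PurePosixPath(name).suffix.lower()
        if suffix in PICKLE_SUFFIXES:
            flagged["pickle"].append(name)
        elif suffix in SCRIPT_SUFFIXES:
            flagged["script"].append(name)
    return flagged


def audit_repo(repo_id, revision=None):
    """List a Hub repository's files at a revision and flag the risky ones."""
    # Lazy import so flag_risky_files can be used without huggingface_hub.
    from huggingface_hub import list_repo_files

    return flag_risky_files(list_repo_files(repo_id, revision=revision))
```

When loading the model afterwards, passing `revision=` with a specific commit and `use_safetensors=True` to `from_pretrained`, and leaving `trust_remote_code` at its default of `False`, narrows what a repository can execute on your machine.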

Creating a Custom GPT with Hugging Face

One of the most exciting features of Hugging Face AI is the ability to fine-tune custom GPT-style models. You can start with an existing model, train it with your dataset, and deploy it through the Inference API or Spaces.

When building private models, remember to:

- Keep repositories private during training.

- Limit token scopes to the minimum required.

- Monitor API usage regularly.

These actions help maintain strong Hugging Face security and prevent unintentional leaks or misuse. As a result, your custom LLMs remain both performant and compliant.
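The first item in the list above, keeping the repository private, can be checked in code rather than by eye. This sketch assumes a token with write access and uses hypothetical helper names (`ensure_private`, `create_private_repo`); the `HfApi`, `create_repo`, and `model_info` calls come from `huggingface_hub`.

```python
def ensure_private(repo_info) -> None:
    """Raise if the repository metadata does not report the repo as private."""
    if not getattr(repo_info, "private", False):
        repo_name = getattr(repo_info, "id", "<unknown>")
        raise PermissionError(f"Repository {repo_name} is not private.")


def create_private_repo(repo_id: str, token: str) -> None:
    """Create a private model repository (or reuse it) and verify its visibility."""
    # Lazy import so ensure_private can be tested without huggingface_hub.
    from huggingface_hub import HfApi

    api = HfApi(token=token)
    api.create_repo(repo_id=repo_id, private=True, exist_ok=True)
    # exist_ok=True does not change an existing repo's visibility, so check it.
    ensure_private(api.model_info(repo_id))
```

The explicit check matters because a repository that already exists keeps whatever visibility it had, even when `private=True` is passed again.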

Is Hugging Face Free?

Yes, Hugging Face AI offers a free tier for open-source use. Developers can host public models and datasets without cost, while advanced features, such as private models, managed inference, or enterprise support, are available under paid plans.

Security policies apply to every tier, so reviewing the documentation and terms helps ensure compliance when deploying at scale.

Full details are available on Hugging Face's official security page.

Practical Ways to Keep Your Projects Secure

Effective Hugging Face security goes beyond credentials. It involves checking the integrity of every component that interacts with your workflow.

Follow these essential guidelines:

- Enable two-factor authentication on all accounts.

- Use private repositories for sensitive content.

- Review model cards and datasets for possible data exposure.

- Rotate API keys frequently.

- Integrate automated scanning into CI/CD pipelines.

In addition, adopting frameworks such as the NIST AI Risk Management Framework helps align your AI development with industry standards and keeps your models transparent and trustworthy.
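As an illustration of the automated scanning guideline, the sketch below searches a source tree for strings that look like Hugging Face access tokens. It assumes tokens start with `hf_` followed by a long alphanumeric string; the exact length in the pattern is a guess, and `find_tokens` and `scan_tree` are hypothetical names. A dedicated secret scanner will cover far more cases.

```python
import re
from pathlib import Path

# Assumption: access tokens look like "hf_" plus 30 or more alphanumeric characters.
TOKEN_PATTERN = re.compile(r"hf_[A-Za-z0-9]{30,}")

# File types checked in this sketch; extend the tuple for your own project.
TEXT_SUFFIXES = (".py", ".yml", ".yaml", ".json", ".txt", ".cfg", ".toml")


def find_tokens(text):
    """Return every substring of text that matches the token pattern."""
    return TOKEN_PATTERN.findall(text)


def scan_tree(root):
    """Return {file path: number of suspected tokens} for files under root."""
    hits = {}
    for path in Path(root).rglob("*"):
        # ".env" has no suffix according to pathlib, so it is matched by name.
        if path.is_file() and (path.suffix in TEXT_SUFFIXES or path.name == ".env"):
            found = find_tokens(path.read_text(errors="ignore"))
            if found:
                hits[str(path)] = len(found)
    return hits
```

In a CI job, a wrapper script could call `scan_tree(".")` and exit with a non-zero status when the result is not empty, which fails the build before a token is merged.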

Ownership and Community Behind Hugging Face

Hugging Face was founded in 2016 by Clément Delangue, Julien Chaumond, and Thomas Wolf. The company is privately held and supported by leading investors, while maintaining a strong open-source community.

Because the platform grows rapidly, maintaining consistent security practices becomes even more important. The collaboration between contributors and researchers thrives when everyone applies responsible Hugging Face security habits.

Is Hugging Face Safe to Use?

Yes, it is safe when used correctly. The platform enforces encrypted connections, secure tokens, and verified accounts. However, safety depends on user behavior. For instance, avoid running code from unknown repositories and always inspect workflow files before execution.

By combining platform safeguards with your own checks, you strengthen overall Hugging Face security and protect your AI projects from supply chain attacks.
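One simple check of your own is to compare a downloaded file against a SHA-256 digest that you recorded when you first reviewed it. The sketch below uses only the standard library; `sha256_of` and `verify_file` are hypothetical helper names, and where the expected digest comes from is up to your own process.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_file(path, expected_sha256):
    """Raise ValueError if the file's digest differs from the expected one."""
    actual = sha256_of(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(f"Checksum mismatch for {path}: got {actual}")
```

If a model file changes upstream without your knowledge, the mismatch surfaces before the file is ever loaded.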

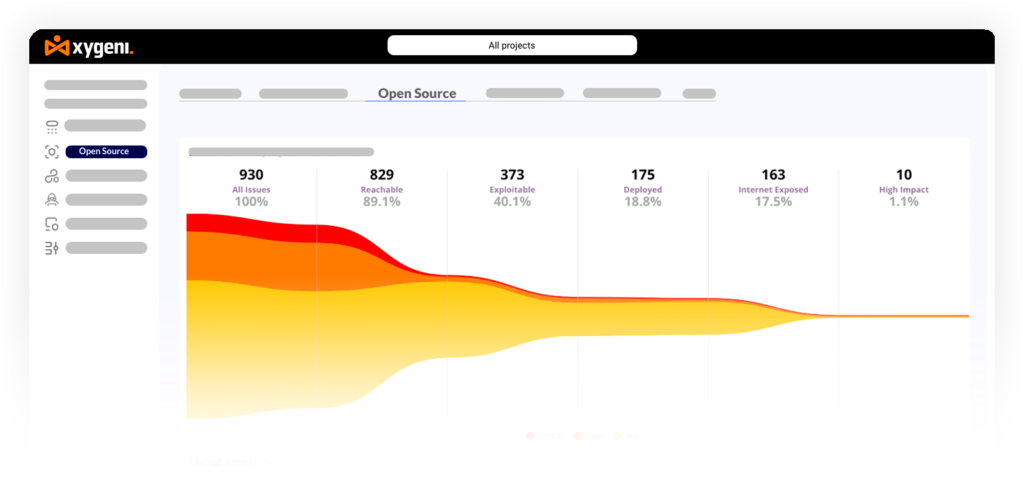

How Xygeni Helps Secure Hugging Face AI Workflows

Hugging Face AI is powerful, but keeping it safe requires visibility and guardrails across your development pipeline. Xygeni helps you secure Hugging Face projects from code to deployment by scanning every stage automatically.

Here’s how it strengthens your workflow:

Protects access tokens and secrets

Xygeni scans repositories, pipelines, and configuration files to detect exposed tokens, API keys, and credentials before they reach production. It can also block merges automatically if sensitive data is found.

Scans dependencies and model files for malicious code

Many shared models include dependencies or scripts that may introduce risk. Xygeni analyzes those packages, detects malware or supply-chain tampering, and alerts your team before installation.

Enforces secure CI/CD practices

When using Hugging Face inside automated pipelines, Xygeni validates each job configuration. It checks for unsafe commands, weak permissions, and missing validation steps to ensure your builds run safely.

Applies policy guardrails

Teams can define custom rules such as “no unverified models,” “no public datasets with secrets,” or “no missing encryption.” These guardrails enforce security automatically across your repositories.
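To make the idea of a rule such as “no unverified models” concrete, here is a generic sketch of an allowlist check. It is not Xygeni's rule format or API; the organization name `my-org`, the `ModelRef` class, and the `policy_violations` function are all hypothetical. The sketch treats a model as acceptable only if it comes from an approved organization and is pinned to a full commit hash.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist of trusted Hub organizations.
ALLOWED_ORGS = {"my-org"}

# A full Git commit hash is 40 lowercase hexadecimal characters.
COMMIT_HASH = re.compile(r"^[0-9a-f]{40}$")


@dataclass(frozen=True)
class ModelRef:
    repo_id: str                    # for example "my-org/my-model"
    revision: Optional[str] = None  # commit hash the model is pinned to


def policy_violations(ref: ModelRef):
    """Return a list of human-readable rule violations for one model reference."""
    violations = []
    org = ref.repo_id.split("/")[0] if "/" in ref.repo_id else ""
    if org not in ALLOWED_ORGS:
        violations.append(f"{ref.repo_id}: organization is not on the allowlist")
    if not (ref.revision and COMMIT_HASH.match(ref.revision)):
        violations.append(f"{ref.repo_id}: revision is not pinned to a commit hash")
    return violations
```

An empty list means the reference passes both rules; anything else can be reported or used to block a merge.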

Automates remediation with AI Auto-Fix

When a vulnerability or misconfiguration appears, Xygeni suggests safe patches or generates ready-to-merge pull requests, saving valuable developer time.

By integrating Xygeni into your workflow, Hugging Face projects become safer by default. Developers maintain speed and flexibility, while every model, dataset, and pipeline step complies with strong security standards.