MCP security is now a top priority for DevSecOps teams working with large language models. The Model Context Protocol (MCP) allows LLMs to connect directly with developer tools, local environments, and CI/CD systems, enabling powerful automation but also creating new risks. As these integrations deepen, applying MCP server security best practices becomes essential. Without proper safeguards, an AI assistant could expose secrets, execute unsafe commands, or change production dependencies unintentionally.

This article explains how the model context protocol works, which vulnerabilities it introduces, and how to secure MCP servers effectively. It also shows how Xygeni helps DevSecOps teams detect unsafe AI–tool interactions, enforce guardrails, and keep automation safe across every stage of the development lifecycle.

What Is the Model Context Protocol (MCP)?

The model context protocol defines a communication layer between an LLM and external developer tools. Instead of responding only with text, the model can now send structured requests to connected systems. For example, it can call an API, open a file, or retrieve logs from a build pipeline.

In practical terms, MCP lets an LLM become an “active” assistant inside the development environment. When a developer asks the model to run a test, check dependencies, or scan a container, the LLM sends that request through the MCP interface. The connected MCP server receives it and executes the task using authorized local tools.

This interaction saves time and reduces context switching. However, it also gives the model a path to sensitive resources such as local file paths, credentials, and system commands. As a result, MCP security must ensure that the AI can interact safely without crossing predefined boundaries.
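To make the idea of a structured request concrete, here is a minimal sketch of the kind of message a model-side client might send. MCP messages are JSON-RPC 2.0, and the `tools/call` method with `name` and `arguments` parameters follows the published MCP specification. The tool name `run_tests`, its `path` argument, and the helper `make_tool_call` are hypothetical and used only for illustration.

```python
import json
from itertools import count

# Monotonic request ids so responses can be matched to requests.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "run_tests" is a hypothetical tool exposed by a hypothetical server.
message = make_tool_call("run_tests", {"path": "tests/unit"})
print(message)
```

Everything the server needs to decide whether the action is safe, namely the tool name and its arguments, is present in this message, which is why validation on the server side is practical.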

How MCP Servers Work in LLM–DevOps Integrations

In a typical setup, the MCP server acts as a secure bridge between the LLM and the developer’s environment. It interprets model requests, validates them, and forwards them to trusted tools such as VS Code, GitHub Actions, or a testing framework.

Each request names the tool or resource the model wants to use and the arguments for that action. The server then decides whether the action is allowed. Ideally, an MCP security layer validates this context before anything runs, so unwanted operations never reach the underlying tools.

For example:

- When the model asks to open a local file, the MCP server checks path permissions.

- If it wants to install a package, the server validates the source and version.

- When a command touches a production branch, the server can require human approval.

These checks form the foundation of MCP server security best practices, the guardrails that prevent models from performing actions outside their safe zone.
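The three checks listed above could be sketched roughly as follows. This is an illustration under stated assumptions, not how any particular MCP server implements them: the allowed root, trusted index list, protected branch names, and all function names are hypothetical. It needs Python 3.9 or later for `Path.is_relative_to`.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# Hypothetical policy values; a real deployment would load these from config.
ALLOWED_ROOT = Path("/workspace/project")
TRUSTED_INDEXES = {"https://pypi.org/simple"}
PROTECTED_BRANCHES = {"main", "production"}


@dataclass
class Decision:
    allowed: bool
    reason: str
    needs_approval: bool = False


def check_file_access(requested: str) -> Decision:
    # Resolve ".." segments and symlinks before comparing against the root.
    target = (ALLOWED_ROOT / requested).resolve()
    if target.is_relative_to(ALLOWED_ROOT.resolve()):
        return Decision(True, "path is inside the allowed root")
    return Decision(False, "path escapes the allowed root")


def check_package_install(name: str, version: Optional[str], index_url: str) -> Decision:
    if index_url not in TRUSTED_INDEXES:
        return Decision(False, "untrusted package index")
    if not version:
        return Decision(False, "version must be pinned")
    return Decision(True, f"{name}=={version} from a trusted index")


def check_branch_command(branch: str) -> Decision:
    # Protected branches are not rejected outright; they wait for a human.
    if branch in PROTECTED_BRANCHES:
        return Decision(True, "protected branch", needs_approval=True)
    return Decision(True, "unprotected branch")


print(check_file_access("src/app.py"))
print(check_file_access("../../etc/passwd"))
print(check_branch_command("main"))
```

Returning a `Decision` object rather than a bare boolean keeps the reason available for audit logs and lets the caller route approval requests separately from denials.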

Key Risks in MCP Security

While the model context protocol improves automation, it also introduces several attack surfaces. The following are the most relevant risks to watch closely:

1. Local Exposure: If an MCP server lacks isolation, an LLM could access local files, environment variables, or sensitive data unintentionally. This is one of the most common MCP security failures.

2. Secret Leakage: An insecure configuration may expose tokens, API keys, or credentials through prompts or responses. These leaks can quickly spread through logs or model memory.

3. Command Injection: Because LLMs generate text, a crafted prompt could trick the model into sending a harmful command. Without validation, the MCP server might execute it.

4. Dependency Tampering: Some MCP setups allow the AI to install or update dependencies automatically. If not verified, a malicious package could compromise the local environment.

5. Overprivileged Access: Granting the AI full system permissions can lead to uncontrolled execution or lateral movement. Restricting privileges is one of the core MCP server security best practices.

Each of these risks shows that the model context protocol must be treated as part of the organization’s security perimeter. The same principles that protect APIs or cloud workloads now apply to AI–DevOps integrations.
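As one illustration of how the command injection risk can be narrowed, the sketch below parses a model-supplied command into an argument list and checks it against an allowlist before anything runs. The allowlist contents and the function name `parse_safe_command` are assumptions made for this example. The resulting list is meant to be passed to something like `subprocess.run(argv, shell=False)`, so no shell ever interprets the text.

```python
import shlex

# Hypothetical allowlist: program -> permitted subcommands.
# None means "no subcommand check" in this sketch.
ALLOWED_COMMANDS = {
    "git": {"status", "diff", "log"},
    "pytest": None,
}

# Characters that only make sense when a shell interprets the string.
FORBIDDEN_CHARS = set(";|&`$<>\n")


def parse_safe_command(raw: str) -> list[str]:
    """Return an argv list for an allowlisted command, or raise ValueError."""
    if any(ch in FORBIDDEN_CHARS for ch in raw):
        raise ValueError("shell metacharacters are not allowed")
    argv = shlex.split(raw)
    if not argv:
        raise ValueError("empty command")
    program, args = argv[0], argv[1:]
    if program not in ALLOWED_COMMANDS:
        raise ValueError(f"program not allowlisted: {program}")
    subcommands = ALLOWED_COMMANDS[program]
    if subcommands is not None and (not args or args[0] not in subcommands):
        raise ValueError(f"subcommand not allowlisted for {program}")
    return argv


print(parse_safe_command("git diff --stat"))  # ['git', 'diff', '--stat']

for attempt in ("git status; rm -rf /", "rm -rf /", "git push origin main"):
    try:
        parse_safe_command(attempt)
    except ValueError as exc:
        print(f"rejected {attempt!r}: {exc}")
```

An allowlist is used here instead of a blocklist because the set of safe commands is small and known, while the set of harmful ones is open-ended. Individual options can still be dangerous, so a production policy would likely validate arguments as well.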

MCP Server Security Best Practices

To build secure and reliable MCP integrations, teams should apply layered protection. The following MCP server security best practices can help prevent most common incidents:

| MCP Server Security Best Practice | Description |
|---|---|
| Validate and Sanitize All Requests | Never execute model requests directly. Each call must go through validation rules that check syntax, intent, and target scope. |
| Limit Filesystem and Network Access | Restrict the model’s visibility to specific directories or endpoints. Isolation prevents data leaks and limits lateral access. |
| Apply Permission Controls | Define which tools, APIs, and repositories the model can use. Fine-grained access control keeps AI activity predictable and secure. |
| Use Containerization or Sandboxing | Run each MCP session inside an isolated environment. This prevents cross-contamination between builds or users and limits potential impact. |
| Monitor and Audit Activity | Keep detailed logs of every model action, command, and response. Monitoring supports early incident detection and compliance verification. |
| Rotate Tokens and Separate Credentials | Store model credentials separately from development keys. Frequent token rotation lowers the risk of reuse or unauthorized access. |

When implemented together, these MCP server security best practices create strong guardrails that let teams benefit from model context protocol automation without exposing core systems.
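The monitoring practice interacts with the secret leakage risk: audit logs are only helpful if they do not become a new place where credentials accumulate. The following sketch shows one possible way to redact likely secrets before a tool call is logged. The regular expressions are illustrative and far from exhaustive, the record layout is an assumption, and only flat argument dictionaries are handled.

```python
import json
import re
import time

# Illustrative patterns only; real scanners use much larger rule sets.
SECRET_PATTERNS = [
    re.compile(r"ghp_[A-Za-z0-9]{36}"),  # GitHub classic token shape
    re.compile(r"AKIA[0-9A-Z]{16}"),     # AWS access key id shape
]
SENSITIVE_KEYS = re.compile(r"(?i)password|secret|token|api[_-]?key")


def redact(text: str) -> str:
    """Replace anything matching a known secret pattern."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def redact_arguments(arguments: dict) -> dict:
    """Redact a flat dict: by key name first, then by value pattern."""
    clean = {}
    for key, value in arguments.items():
        if SENSITIVE_KEYS.search(key):
            clean[key] = "[REDACTED]"
        elif isinstance(value, str):
            clean[key] = redact(value)
        else:
            clean[key] = value
    return clean


def audit_record(tool: str, arguments: dict, outcome: str) -> str:
    """Build one JSON log line for a tool call (hypothetical layout)."""
    return json.dumps({
        "ts": time.time(),
        "tool": tool,
        "arguments": redact_arguments(arguments),
        "outcome": outcome,
    })


print(audit_record("deploy", {"api_key": "not-a-real-key", "env": "staging"}, "denied"))
```

Redacting by key name as well as by value pattern catches secrets whose format is unknown, at the cost of occasionally hiding a harmless field.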

Xygeni’s Perspective on MCP Security

At Xygeni, security teams see the model context protocol as both a breakthrough and a new frontier for DevSecOps. The same AI that speeds up code review can also expand the attack surface if not controlled.

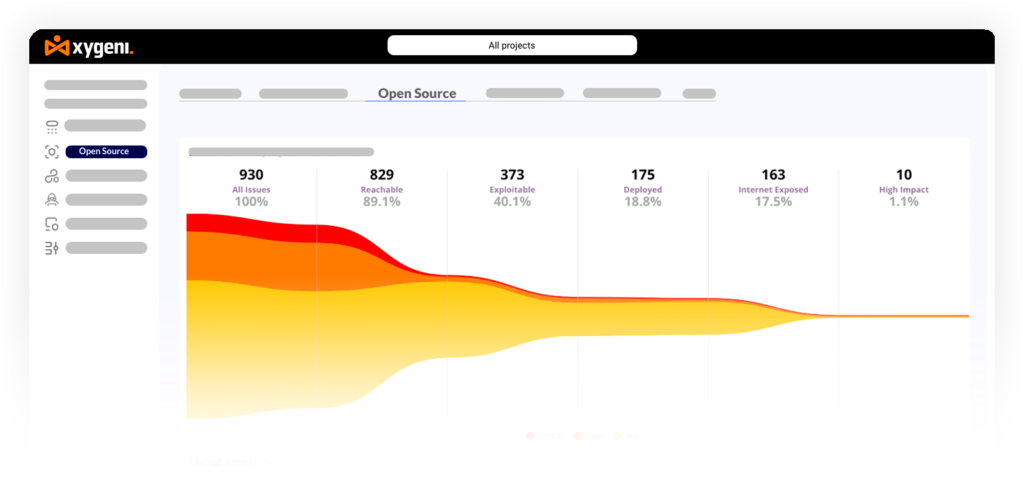

Xygeni helps organizations manage this new risk by analyzing how LLMs interact with their development pipelines. The platform detects unsafe patterns, such as secrets shared through AI prompts or model commands that reach protected environments. It also applies guardrails that block unsafe actions, restrict unauthorized commands, and enforce least privilege across MCP connections.

Through continuous monitoring and contextual analysis, Xygeni provides clear visibility into every AI–DevOps interaction. This makes it easier for teams to trust their AI tools and ensure that automation happens safely inside the pipeline, not outside of it.

The Future of MCP Security

The rise of LLMs in developer tools will only accelerate. Soon, most IDEs, build systems, and repositories will support the model context protocol by default. This shift will bring massive productivity gains, but also a new responsibility for security teams.

As more AI systems connect directly to source code and infrastructure, MCP security must become part of the standard DevSecOps workflow. Developers will need visibility, policy enforcement, and continuous assurance that their AI assistants stay within limits.

The organizations that adopt MCP server security best practices today will lead this transition safely. They will harness AI’s speed without sacrificing control or trust.

Final Thoughts

The model context protocol turns large language models into active participants in software development. It connects AI directly to the tools developers rely on every day. However, every new connection expands the attack surface.

By applying strict MCP security controls and following proven MCP server security best practices, teams can unlock the benefits of AI-driven automation while maintaining full control.

Xygeni helps organizations achieve exactly that balance. Its platform integrates seamlessly with modern CI/CD environments to detect risky AI–DevOps flows, enforce policies, and ensure that every AI action happens securely by design.

About the Author

Written by Fátima Said, Content Marketing Manager specialized in Application Security at Xygeni Security.

Fátima creates developer-friendly, research-based content on AppSec, ASPM, and DevSecOps. She translates complex technical concepts into clear, actionable insights that connect cybersecurity innovation with business impact.