Introduction: Why MCP Servers Matter in AI Projects

MCP servers are becoming a key component in modern AI systems. As more teams build agent-based workflows and connect large language models to internal tools, MCP servers and the Model Context Protocol are quickly turning into core infrastructure for any serious MCP-based AI project.

At a high level, the Model Context Protocol (MCP) defines how an AI model receives structured context from external sources such as files, APIs, or internal services. The MCP server is the part that makes this possible in practice. It acts as the control layer between the model and the tools it can access, so it directly affects how data flows, how permissions are applied, and how much trust the system requires.

As a result, MCP servers unlock powerful new capabilities. Developers can give AI agents real awareness of codebases, documents, or operational systems. At the same time, this flexibility introduces new risks. If context is exposed, shared incorrectly, or manipulated, an AI system can leak data, misuse tools, or act outside its intended scope.

Therefore, understanding how MCP servers work is no longer optional. It is essential for anyone building AI platforms, internal copilots, or production-grade agent workflows. Moreover, teams must think beyond functionality. Security, access control, and visibility need to be part of the design from the very beginning.

In this guide, we answer the most common questions developers ask about MCP servers, the Model Context Protocol, and real-world MCP AI projects. You will learn what these components do, how they work together, and what risks they introduce. Most importantly, you will see how to approach MCP security in a practical way, without slowing down development.

What is an MCP server?

An MCP server is a service that connects AI models to external tools, data sources, and systems in a controlled and auditable way. It follows the Model Context Protocol, which defines how models request and receive context when they interact with the outside world.

In practice, an MCP server works as a secure bridge. The AI model does not access files, APIs, databases, or internal services directly. Instead, the application hosting the model (the MCP client) sends structured requests to the server on the model's behalf. The server reviews each request, applies clear rules, and returns only the approved data or action.
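The bridge idea can be sketched in a few lines. This is a minimal illustration rather than a real MCP implementation: the `TOOLS` registry, the `tool` decorator, the `read_doc` tool, and the request shape are all hypothetical names chosen for the example. The point it shows is that anything not explicitly registered is simply unreachable.

```python
from typing import Any, Callable, Dict

# Hypothetical registry: the only operations a model can reach.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Register a function as an exposed tool under the given name."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return decorator


@tool("read_doc")
def read_doc(doc_id: str) -> str:
    # Stand-in for a real backend lookup.
    docs = {"onboarding": "Welcome to the team."}
    return docs.get(doc_id, "")


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch a structured request, refusing anything not registered."""
    name = request.get("tool")
    handler = TOOLS.get(name)
    if handler is None:
        return {"ok": False, "error": f"tool not allowed: {name}"}
    try:
        result = handler(**request.get("arguments", {}))
    except TypeError:
        # Wrong or unexpected argument names are rejected, not guessed at.
        return {"ok": False, "error": "invalid arguments"}
    return {"ok": True, "result": result}
```

A request for `read_doc` succeeds, while a request for an unregistered tool such as `delete_db` gets a structured denial instead of reaching any backend.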

This controlled flow gives teams clear visibility over AI behavior. It also reduces risks such as data leaks, unsafe commands, or uncontrolled access. These protections matter even more when AI agents run inside developer tools, CI/CD pipelines, or production systems.

What is the Model Context Protocol?

The Model Context Protocol is a standard that defines how AI models receive context from external systems in a safe and predictable way. Rather than exposing tools or data directly, the protocol limits what the model can request and how systems respond.

In simple terms, the protocol works like a contract. It states which actions the AI can perform and what context it may access through an MCP server. This replaces implicit trust with explicit rules.

From a security perspective, this matters because context gives power. Too much context can expose sensitive data or trigger unwanted actions. By using the Model Context Protocol, teams set clear boundaries and keep AI workflows under control as they grow.

What is an MCP server in an AI context?

In an AI context, an MCP server enforces the Model Context Protocol during real interactions between models and external systems. It becomes the main control point for how an AI model receives and uses context.

Instead of trusting the model with direct access, the MCP server checks every request. It applies permissions and policies before sending a response. As a result, the model only receives the minimum context needed to complete its task.
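One way to picture "minimum context" is field-level filtering before a response leaves the server. The sketch below assumes a hypothetical mapping from caller scope to visible fields (`VISIBLE_FIELDS`) and a made-up ticket record; a real deployment would define its own scopes and data model.

```python
from typing import Any, Dict, Set

# Hypothetical mapping from caller scope to the fields it may receive.
VISIBLE_FIELDS: Dict[str, Set[str]] = {
    "support-agent": {"ticket_id", "status", "summary"},
    "billing-agent": {"ticket_id", "status", "invoice_total"},
}


def minimal_context(record: Dict[str, Any], scope: str) -> Dict[str, Any]:
    """Return only the fields the given scope is allowed to receive."""
    allowed = VISIBLE_FIELDS.get(scope, set())  # unknown scopes see nothing
    return {key: value for key, value in record.items() if key in allowed}


ticket = {
    "ticket_id": "T-42",
    "status": "open",
    "summary": "Login fails after password reset",
    "invoice_total": 129.0,
    "customer_email": "user@example.com",
}
```

Here `minimal_context(ticket, "support-agent")` drops the invoice total and the customer email, and an unrecognized scope receives an empty record rather than a default set of fields.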

From an application security (AppSec) perspective, this design plays a key role. The MCP server limits data exposure, blocks unsafe tool use, and creates a clear audit trail. Therefore, teams can integrate AI into real workflows while keeping control over access and behavior.

What is an MCP AI project?

An MCP AI project is an implementation that uses MCP servers and the Model Context Protocol to connect AI models with real systems in a controlled way. These projects usually involve AI agents that need access to code repositories, APIs, documents, or internal services.

Instead of hard-coding permissions or relying on implicit trust, an MCP AI project defines explicit rules for context access. This makes the system easier to review, test, and reason about as it grows.

From an AppSec perspective, MCP AI projects are important because they introduce security boundaries early. They help teams avoid exposing sensitive systems once AI agents move closer to production workflows.

What are MCP servers?

MCP servers are services that act as intermediaries between AI models and the external resources they need to use. Each server follows the Model Context Protocol and applies rules about what data or actions are allowed.

In practice, organizations often run multiple MCP servers. One may expose source code, another documentation, and another cloud APIs. This separation limits impact and improves visibility.
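To make the effect of this separation concrete, here is a small sketch assuming three hypothetical servers (`code`, `docs`, `cloud`) with made-up tool names. It computes which tools an agent can reach based only on the servers it is connected to.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class ServerSpec:
    name: str
    tools: FrozenSet[str]


# Hypothetical deployment: one server per domain.
SERVERS = [
    ServerSpec("code", frozenset({"search_code", "read_file"})),
    ServerSpec("docs", frozenset({"search_docs"})),
    ServerSpec("cloud", frozenset({"list_instances"})),
]


def owner_of(tool_name: str) -> Optional[str]:
    """Return the server that exposes a tool, or None if no server does."""
    for server in SERVERS:
        if tool_name in server.tools:
            return server.name
    return None


def reachable_tools(connected: Set[str]) -> Set[str]:
    """Tools an agent can reach given the servers it is connected to."""
    tools: Set[str] = set()
    for server in SERVERS:
        if server.name in connected:
            tools |= server.tools
    return tools
```

An agent connected only to `docs` can reach `search_docs` and nothing else, so a mistake or compromise in that agent cannot touch source code or cloud APIs.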

Because MCP servers centralize access control, they also become natural points for logging, auditing, and security checks across AI workflows.

How does MCP work?

MCP works through a structured request and response flow between an AI model and an MCP server. When the model needs data or wants to perform an action, it sends a request that clearly describes its intent.

The MCP server evaluates that request, applies permissions and policies, and returns only the approved context. The model never interacts directly with the underlying system.
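On the wire, MCP messages use JSON-RPC 2.0, and a tool invocation is sent with the `tools/call` method. The sketch below builds a simplified request and result pair. The tool name `get_ticket` and its arguments are hypothetical, and the full message schema in the specification carries more detail than shown here.

```python
import json


def make_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Build a simplified JSON-RPC 2.0 request for an MCP tool call."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def make_tool_result(request_id: int, text: str, is_error: bool = False) -> str:
    """Build a simplified JSON-RPC 2.0 response carrying a text result."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,  # echoes the request id so the caller can match them
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,
        },
    })


request = make_tool_call(1, "get_ticket", {"ticket_id": "T-42"})
response = make_tool_result(1, "status: open")
```

Because the request names the tool and its arguments explicitly, the server has everything it needs to evaluate the call before touching the underlying system.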

This approach reduces data exposure and helps prevent unintended side effects during AI-driven operations.

How do MCP servers work?

MCP servers receive structured requests from AI models and translate them into controlled interactions with external systems. They act as policy enforcers rather than simple proxies.

Each request is checked against predefined rules, such as allowed actions, data scope, or execution limits. Only after these checks does the server fetch data or trigger a task.
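The three kinds of rules mentioned above (allowed actions, data scope, and execution limits) could be combined in a single check. This is a sketch under stated assumptions: the `Policy` class, its field names, and the per-session call counter are illustrative and not part of MCP itself.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Dict, FrozenSet, Tuple


@dataclass
class Policy:
    allowed_actions: FrozenSet[str]  # which actions may run at all
    root: str                        # data scope: only paths under this root
    max_calls: int                   # execution limit per session
    calls: Dict[str, int] = field(default_factory=dict)

    def check(self, session: str, action: str, path: str) -> Tuple[bool, str]:
        """Return (allowed, reason) for one request."""
        if action not in self.allowed_actions:
            return False, "action not allowed"

        candidate = PurePosixPath(path)
        if ".." in candidate.parts:
            return False, "path traversal rejected"
        try:
            candidate.relative_to(self.root)
        except ValueError:
            return False, "path outside allowed scope"

        used = self.calls.get(session, 0)
        if used >= self.max_calls:
            return False, "call limit reached"

        # Only approved requests count against the limit.
        self.calls[session] = used + 1
        return True, "ok"


policy = Policy(frozenset({"read"}), "/srv/docs", max_calls=2)
```

Only when `check` returns `True` would the server go on to fetch data or trigger a task. Returning a reason alongside the decision also gives the audit log something useful to record.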

Because of this layered flow, MCP servers make AI behavior easier to understand and safer to operate in sensitive environments.

How to build an MCP server?

Building an MCP server starts with implementing the Model Context Protocol specification and defining clear boundaries around what the AI model can access. This includes deciding which tools are exposed and what data is available.

Teams usually wrap existing APIs or services behind the MCP server instead of exposing them directly. This keeps scope tight and rules consistent.

For real use cases, it is also important to add logging and access controls so every AI request can be reviewed later.
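Wrapping an existing API and recording every call can be done with a small decorator. The sketch below is illustrative: `legacy_get_order` stands in for an internal API, the `mcp.audit` logger name is an assumption, and the in-memory `AUDIT_TRAIL` list exists only so the example is easy to inspect.

```python
import functools
import json
import logging
import time
from typing import Any, Callable, Dict, List

audit_logger = logging.getLogger("mcp.audit")  # hypothetical logger name
AUDIT_TRAIL: List[Dict[str, Any]] = []         # in-memory copy for the example


def audited(tool_name: str):
    """Wrap a function so every call is recorded, whether it succeeds or fails."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(**arguments: Any) -> Any:
            entry: Dict[str, Any] = {
                "tool": tool_name,
                "arguments": arguments,
                "ts": time.time(),
            }
            try:
                result = fn(**arguments)
                entry["outcome"] = "ok"
                return result
            except Exception as exc:
                entry["outcome"] = f"error: {type(exc).__name__}"
                raise
            finally:
                AUDIT_TRAIL.append(entry)
                audit_logger.info(json.dumps(entry, default=str))
        return wrapper
    return decorator


def legacy_get_order(order_id: int) -> dict:
    """Stand-in for an existing internal API call."""
    if order_id <= 0:
        raise ValueError("order_id must be positive")
    return {"order_id": order_id, "status": "shipped"}


# Expose the existing function only through the audited wrapper.
get_order = audited("get_order")(legacy_get_order)
```

Logging in the `finally` block means failed calls are recorded as well, which is often where the interesting security signals are. In a real system, sensitive argument values would likely need redaction before being written to the log.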

How to create an MCP server?

To create an MCP server, you first identify the systems your AI model needs to interact with. Then, you expose only the required actions or data through the server, typically as MCP tools and resources.

Each tool or resource should check inputs carefully and return minimal responses. This limits what the model can see and reduces the risk of misuse.
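The input checks described above might look like the following for a hypothetical code-search tool. The argument names (`repo`, `query`, `limit`), the length limits, and the `InvalidInput` exception are assumptions made for this sketch.

```python
import re
from typing import Any, Dict

_REPO_RE = re.compile(r"[a-z0-9][a-z0-9_-]{0,63}")
MAX_RESULTS = 20
ALLOWED_KEYS = {"repo", "query", "limit"}


class InvalidInput(ValueError):
    """Raised when tool arguments fail validation."""


def validate_search(arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Validate arguments for a hypothetical code-search tool."""
    unknown = set(arguments) - ALLOWED_KEYS
    if unknown:
        raise InvalidInput(f"unexpected arguments: {sorted(unknown)}")

    repo = arguments.get("repo")
    if not isinstance(repo, str) or not _REPO_RE.fullmatch(repo):
        raise InvalidInput("repo must be a short lowercase identifier")

    query = arguments.get("query")
    if not isinstance(query, str) or not 1 <= len(query) <= 200:
        raise InvalidInput("query must be 1 to 200 characters")

    limit = arguments.get("limit", 10)
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(limit, bool) or not isinstance(limit, int):
        raise InvalidInput("limit must be an integer")
    if not 1 <= limit <= MAX_RESULTS:
        raise InvalidInput(f"limit must be between 1 and {MAX_RESULTS}")

    return {"repo": repo, "query": query, "limit": limit}
```

Rejecting unknown keys and capping `limit` keeps both the request and the response small. The validated dictionary is the only thing passed on to the backend.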

Over time, teams often expand MCP servers step by step, adding new capabilities only after reviewing their security impact.

What is the MCP Model Context Protocol?

The Model Context Protocol (MCP) is the formal specification that defines how context is shared between AI models and external systems. It describes request formats, response structures, and expected behavior, with messages exchanged as JSON-RPC 2.0.

By following a shared protocol, teams avoid custom integrations that are hard to secure or audit. Every interaction follows the same predictable pattern.

This consistency becomes especially valuable when multiple AI agents rely on shared infrastructure.

Who created MCP?

Anthropic introduced the Model Context Protocol in November 2024 as an open standard for connecting AI assistants to external tools and data sources. The goal was to replace fragmented, one-off integrations with a single protocol while keeping tool access explicit and controllable.

Although it originated at Anthropic, MCP is designed to be vendor-agnostic. Different models, tools, and platforms can adopt it without being tied to one provider.

As adoption grows, MCP is increasingly seen as a foundation for secure AI agent architectures.

Is MCP secure by default?

MCP provides a strong structure, but security still depends on how MCP servers are implemented and managed. The protocol defines boundaries, yet teams must configure permissions and rules carefully.

If servers expose too much data or allow broad actions, the protocol's boundaries offer little real protection. Configured carefully, MCP offers much tighter control than giving a model direct access to the underlying systems.

In practice, MCP works best when combined with secure development practices, regular reviews, and automated scanning.

How to Secure an MCP Server

Securing an MCP server starts by treating it as part of your attack surface. It sits between AI models and real systems, so every request matters.

First, limit access strictly. Each tool or resource an MCP server exposes should provide only the minimum data or action required. Avoid broad permissions and split servers by domain when possible.

Next, review and log every request. Clear rules, basic validation, and audit logs help teams understand what the AI is doing and why.

Finally, secure the MCP server like any other production service. Its code, dependencies, infrastructure, and pipelines need continuous protection.

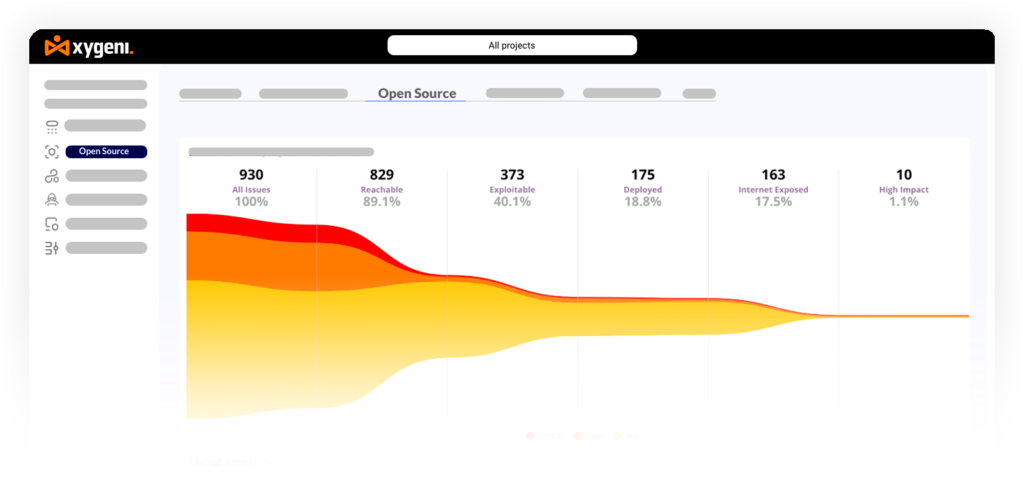

How Xygeni Strengthens MCP Server Security

Xygeni secures everything around the MCP server, without replacing it.

It scans MCP server code and dependencies, detects exposed secrets, and reviews IaC used to deploy MCP services. In addition, it enforces guardrails in CI/CD to block risky changes before release.

Xygeni also integrates with IDEs, copilots, and AI agents, giving teams early security feedback while MCP servers evolve.

Together, MCP controls what AI can do, and Xygeni ensures the system behind it stays secure.

Conclusion

MCP servers and the Model Context Protocol give teams a safer way to connect AI models with real systems. They replace direct access with clear rules and controlled context, which reduces risk from the start.

However, MCP alone is not enough. MCP servers still run code, use dependencies, and live inside pipelines and cloud environments. For that reason, they need the same AppSec attention as any other production service.

By combining MCP with automated security around code, dependencies, infrastructure, and CI/CD, teams can scale AI safely. This approach lets developers move fast, while keeping control over access, behavior, and security.

About the Author

Written by Fátima Said, Content Marketing Manager specializing in Application Security at Xygeni Security.

Fátima creates developer-friendly, research-based content on AppSec, ASPM, and DevSecOps. She translates complex technical concepts into clear, actionable insights that connect cybersecurity innovation with business impact.