Handing your house keys to criminals is definitely not a good idea. Yet that is what often happens in organizations developing modern software.

In this first post about secrets leaks, we will analyze why this happens so often, what the consequences are, and what actions to take to prevent or mitigate the problem and handle secrets leak incidents.

OMG! I pushed my cloud access keys to a public repo

Hard-coded secrets in source code or in the configuration files of DevOps tools may end up in the wrong hands. If the secret is committed to a public source repository, you are doomed for sure. But even private repositories are not safe, as secrets are also leaked through application binaries, logs, or stolen source code.
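As a rough illustration of the alternative, here is a minimal Python sketch that reads a secret from the process environment at run time instead of embedding it in the source. The names `load_secret`, `MissingSecretError` and `PAYMENTS_API_TOKEN` are hypothetical, and the sketch assumes that a vault or the CI/CD system injects the value into the environment.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured."""


def load_secret(name: str) -> str:
    """Return the secret stored in environment variable `name`.

    The value never appears in the source code, and the error message
    mentions only the variable name, never the secret itself.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"secret {name!r} is not set in the environment")
    return value


# Hypothetical usage (the variable name is an assumption):
#   token = load_secret("PAYMENTS_API_TOKEN")
```

Failing loudly when the variable is missing is a deliberate choice: a silent fallback to a default value is how hard-coded secrets tend to creep back in.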

In retrospect, it is disturbing how often a simple oversight has led to a serious security breach. Just google “AWS key leaks”, “GitHub access token leaks”, and so on. Don’t take these examples as hidden recommendations for this or that vendor. Use your own!

For example, the (in)famous Codecov attack of April 2021 was possible because the Codecov Docker image contained git credentials that allowed an attacker to gain access to Codecov’s private git repositories and add a single line to Codecov’s bash uploader script to collect environment variables and git repository URLs.

Remember that part of the loot in an attack is the secrets that give access to additional systems, and many attacks invest heavily in exfiltrating credentials, crypto keys and tokens.

The problem is that hard-coded secrets are commonplace. In March 2022 the Lapsus$ APT leaked 189GB of Samsung’s source code and other sensitive files. Analysis revealed it contained some 6,600 hard-coded secrets: 90% for internal systems, but 10% for external services and tools like GitHub, AWS or Google. Those secrets included AWS / Twilio / Google API keys, database connection strings, and other sensitive information. This is the state of affairs in most code bases.

Secrets leaks are the easiest path to supply chain attacks

Package dependencies are currently the most frequent, albeit not the only, target for supply chain attacks. The bad guys could create a new package that ends up installed in victims’ software (using typosquatting and other techniques), but typically they try to infect an existing package, either by modifying the source code in source repositories (SCM) like GitHub, GitLab or Bitbucket, or by adding malicious versions to public registries like NPM, PyPI, RubyGems or Maven Central.

But injecting malicious code, or a malicious dependency hidden in an intricate dependency graph, requires login credentials like username/password, tokens or access keys (let’s call them “keys” for short) for the target source repository or public registry, respectively.

The bad guys sometimes gain the keys via social engineering. The attack on the popular event-stream NPM package provides a good example. But searching for leaked login credentials or access keys is the most frequent technique in software supply chain attacks.

Source repositories and package registries are two essential systems in the software build pipeline. But there are many tools in DevOps: CI/CD systems, tools for running tests, configuration & provisioning automation, or deployment & release. All of them can be abused for injecting malicious code into software. Leaking valid keys for these tools leads directly to misery and agony. Imagine leaking root access keys with full control over your public cloud resources…

The usual recommendations

We are not saying anything new here; you all know this. But take action! Remember that bots are regularly scanning all public SCM repositories. Here are some recommendations, in no particular order.

- If you are responsible for IT security management, define how secrets should be handled in the security policy. But policies are only as good as their enforcement: ensure that a secrets handling guideline is enforced in your organization, including not only your DevOps teams but also your software suppliers, and that your organization’s incident response plan contains provisions for secrets leak incidents.

- Implement and enforce multi-factor authentication (MFA, 2FA or whichever acronym). And no cutbacks in security: a USB security key is worth the few bucks it costs. For an attacker to get in, you would have to git-push one of your thousands of credentials (easy) and then get drunk and leave your key in a bar with something linking it to you (the odds are a bit smaller, particularly if you are a teetotaler).

- Use a password manager with a strong, unsaved password. For handling secrets in systems, use secret vaults. CI/CD systems, cloud providers, SCMs and other DevOps tools provide this service, but you may opt for a generic secret vault solution.

- Prefer short-lived tokens to long-lived access keys. They expire on their own, are easier to revoke, and give attackers a narrower window of opportunity.

- Limit credential reuse: attackers will try credentials harvested from one target against other systems, which is another argument for using a password manager. Password managers and secret vaults should make credential reuse a thing of the past.

- Limit and monitor the use of admin passwords. They are powerful enough to deserve special tracking.

- Implement strong hashing and encryption. This brings us back to USB (cryptographic) keys, strict procedures for sharing credentials with partners and co-workers, and so on.

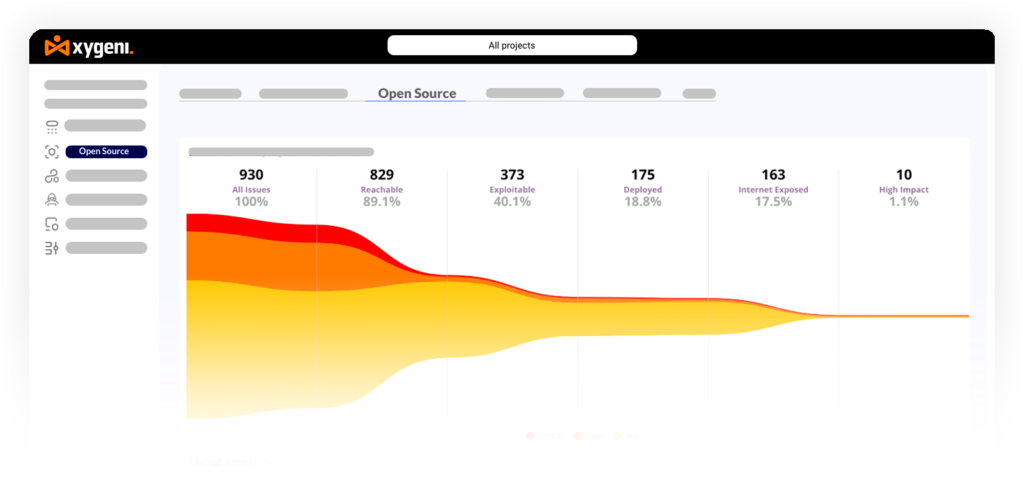

- Use a secrets scanner as a security gate, for example run in a pre-commit hook so that secrets never reach version control. “Before” is the key word here. Alternatively, use post-hoc scans to detect leaked secrets, for example as a check before pull request merges. Note: Our Xygeni platform includes a secrets scanner that supports both modes of operation.

- The manual alternative of using code reviews to search for hard-coded secrets has higher costs and works post-commit (but hopefully at least before the secret is available to outsiders). On the other hand, reviews may detect unconventional secrets that would evade secrets scanners.

- Avoid accidentally committing common files that contain secrets to version control by using appropriate exclude patterns (such as a `.gitignore` template), taking into account files like .env, .npmrc, .pypirc, temporary files… An additional layer in the security onion indeed.

- And the last in this long list: let the cloud providers scan for leaks of their own keys, when available. At the very least, this may let you know about a leak once it has happened, and security is a must for cloud providers. This post-hoc secret scanning is not very transparent about where and how often the scan is performed, and often needs explicit setup, but it is certainly the last resort when everything else fails.
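To make the secrets scanner recommendation above more concrete, here is a minimal Python sketch of the pattern-matching core of such a tool. It is an illustration only, not how any particular product works: the rule names and the `scan_text` function are hypothetical, and the three patterns (AWS access key IDs starting with `AKIA`, GitHub tokens starting with `ghp_`, and PEM private key headers) are a tiny sample of what a real scanner checks, usually combined with entropy analysis and verification.

```python
import re
from typing import List, NamedTuple

# Illustrative rules only; a real scanner ships hundreds of them.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github-token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private-key": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


class Finding(NamedTuple):
    line_no: int  # 1-based line number where the pattern matched
    rule: str     # name of the rule that matched


def scan_text(text: str) -> List[Finding]:
    """Return one finding per (line, rule) pair that matches.

    Only the line number and rule name are reported, so the scanner
    output does not itself leak the secret.
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule))
    return findings
```

A pre-commit hook could call `scan_text` on each staged file and exit with a non-zero status when the returned list is not empty, which blocks the commit. `AKIAIOSFODNN7EXAMPLE`, the placeholder key used in AWS documentation, is a safe value for trying it out.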

OMG! I pushed my cloud access keys to a public repo, take #2

That could happen to the best of us. Roll up your sleeves!

Renew, revoke or disable the leaked secret immediately! If the account has decent MFA, the risk is much lower. Revocation can be harder with, e.g., private keys for websites (you need to issue a new certificate for a new private key and revoke the existing one), but modern tools offer a quick way to renew credentials or revoke tokens.

Follow the steps recommended by the provider when available, like AWS in this example.

Identify the cause of the leak. Knowing how it happened is essential for disclosure, analysis, containment and lessons-learned activities.

Then report the leak to the affected parties, explaining the actions you are taking to plug the leak and reduce damage. There is no way to reverse the damage done: what was leaked is leaked. Be transparent and inform others so they can take action.

Then begin with the forensics. The exposure window is the time between the leak and the moment the secret stopped being valid. Be ready to read logs and look for unusual activity by the affected account during that window. Remove accounts and keys generated using the affected account. Remember that if the affected account has admin privileges, the fix is much more complex.
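The log review described above can be sketched in a few lines of Python. This is a simplified illustration under assumptions: the `LogEvent` record and its fields are hypothetical, and real audit logs (from your cloud provider or SCM) first need to be parsed into something similar.

```python
from datetime import datetime
from typing import Iterable, List, NamedTuple


class LogEvent(NamedTuple):
    timestamp: datetime  # when the action happened
    principal: str       # account or key that performed the action
    action: str          # what was done, e.g. "CreateAccessKey"


def events_in_exposure_window(
    events: Iterable[LogEvent],
    principal: str,
    leaked_at: datetime,
    revoked_at: datetime,
) -> List[LogEvent]:
    """Return the events by `principal` between the leak and the revocation."""
    if revoked_at < leaked_at:
        raise ValueError("revoked_at must not be earlier than leaked_at")
    return [
        event
        for event in events
        if event.principal == principal
        and leaked_at <= event.timestamp <= revoked_at
    ]
```

Every event returned deserves a look, and actions that create new accounts or keys deserve it first, since those survive the revocation of the leaked secret.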

Rewriting (version control) history is complex. Even totalitarian states try this to no avail (pun intended). And it is probably irrelevant: hackers or bots watching public repos might have already cloned the repo or extracted the gold, particularly if the exposure window is large enough.

If you are adventurous and want to see for yourself how long it takes for bots to detect a leaked secret, tripwires like Canary Tokens let you experiment. Remember, bots blacklist the default canarytokens.org domain…

To read more

- Kovacs, E. “Thousands of Secret Keys Found in Leaked Samsung Source Code”. Security Week, Mar 2022.
- Dyjak, A. “Couple of days ago I conducted a small experiment WRT secrets commited to public git repositories…”. Tweet thread, Nov 2020.
- Rzepa, P. “AWS Access Keys Leak in GitHub Repository and Some Improvements in Amazon Reaction”. Medium, Nov 2020.