The discussion around AI BOM did not emerge from academic curiosity. It surfaced because security teams started losing visibility. As machine learning models, foundation models, and AI-assisted code generation entered production systems, traditional software inventories stopped being sufficient. You could list packages, containers, and libraries, yet still have no idea which models were embedded, where training data came from, or which external APIs were shaping runtime behavior. This is the precise gap the AI Bill of Materials is meant to address. Before going further, let’s establish a clear baseline.

Deep dive into AI Bill of Materials #

What is an AI BOM?

An AI BOM (short for AI Bill of Materials) is a structured inventory that documents all AI-related components used within a system. This includes models, datasets, training frameworks, inference engines, third-party APIs, open-source dependencies, and configuration artifacts that influence how AI behaves at build time and runtime. If a Software Bill of Materials (SBOM) answers “what code is inside this application,” an AI Bill of Materials answers a more complex question: what intelligence is embedded here, where did it come from, and what risks does it introduce? An AI BOM does not replace an SBOM. It extends it into areas where traditional dependency tracking fails, particularly around opaque models, external AI services, and continuously evolving artifacts.

Why the AI BOM Exists as a Separate Concept #

Security teams initially attempted to stretch SBOMs to cover AI assets. That approach fails quickly. Models are not libraries. Training datasets are not packages. Prompt templates are not static configuration files. An AI BOM exists because AI systems introduce risk dimensions that SBOMs were never designed to capture.

When teams ask what an AI BOM is, they are often reacting to one of the following realities:

- A model was pulled from a public registry with unknown provenance

- Training data included licensed or sensitive material

- An external LLM API changed its behavior without notice

- A model update introduced bias, leakage, or unsafe outputs

The AI Bill of Materials provides traceability for these scenarios, which is why it is increasingly referenced in AI security, governance, and compliance discussions.

Core Components Documented in an AI BOM #

An AI BOM is only useful if it is specific. While implementations vary, mature AI Bill of Materials structures consistently document the following categories.

Models and Model Artifacts #

This includes model name, version, architecture, source repository or vendor, checksum or hash, and deployment context. Without this, incident response becomes guesswork.
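As a rough illustration of the fields listed above, a model record could be sketched as follows. The `ModelEntry` class, its field names, and the `describe_model` helper are hypothetical and not taken from any standard schema; the only real API used is Python's standard `hashlib`.

```python
import hashlib
from dataclasses import dataclass, asdict
from pathlib import Path


@dataclass(frozen=True)
class ModelEntry:
    """One model record in an AI BOM (illustrative field names, not a standard)."""
    name: str
    version: str
    architecture: str
    source: str               # source repository or vendor
    sha256: str               # digest of the weights file
    deployment_context: str   # where the model runs, e.g. "staging"


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight files are never fully loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def describe_model(path: Path, name: str, version: str, architecture: str,
                   source: str, deployment_context: str) -> ModelEntry:
    """Build a record whose checksum is computed from the artifact itself."""
    return ModelEntry(name, version, architecture, source,
                      sha256_of_file(path), deployment_context)
```

Computing the hash from the file on disk, rather than copying it from a registry page, is what lets an incident responder later match a known-bad digest against what was actually deployed.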

Training and Fine-Tuning Data #

An AI BOM captures datasets used for training or fine-tuning, including origin, licensing constraints, and sensitivity classification. This is critical for regulatory exposure and intellectual property risk.

Frameworks and Toolchains #

TensorFlow, PyTorch, inference runtimes, optimization libraries, and model converters are included here. From a security standpoint, these are executable dependencies with the same malware and vulnerability risks as traditional code.

External AI Services and APIs #

Any reliance on third-party AI services must be listed in the AI Bill of Materials, including provider, usage scope, data flows, and update cadence.

Configuration and Prompt Assets #

Prompts, guardrails, and policy layers materially affect AI behavior. An AI BOM treats them as first-class assets, not comments in a repository.
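Taken together, the five categories above could be assembled into a single machine-readable document. The sketch below is one possible shape, not a reference format: the `AIBom` class, every field name, and all example values (system name, dataset, vendor, prompt text) are invented for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


def fingerprint(text: str) -> str:
    """Content hash so a prompt or policy change shows up as a BOM change."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class AIBom:
    """Hypothetical container with one list per category described above."""
    system: str
    models: list = field(default_factory=list)
    datasets: list = field(default_factory=list)
    frameworks: list = field(default_factory=list)
    external_services: list = field(default_factory=list)
    prompt_assets: list = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# All values below are made-up examples.
bom = AIBom(system="support-chatbot")
bom.models.append({"name": "intent-classifier", "version": "2.3.0",
                   "source": "internal-registry"})
bom.datasets.append({"name": "support-tickets-2023", "license": "proprietary",
                     "sensitivity": "confidential"})
bom.frameworks.append({"name": "torch", "version": "2.2.0"})
bom.external_services.append({"provider": "example-llm-vendor",
                              "usage_scope": "summarization",
                              "data_sent": "ticket text"})
SYSTEM_PROMPT = "You are a support assistant. Never reveal internal ticket IDs."
bom.prompt_assets.append({"name": "system-prompt",
                          "sha256": fingerprint(SYSTEM_PROMPT)})

print(bom.to_json())
```

Storing a hash of the prompt rather than the prompt itself is a design choice made here for brevity; a real inventory might instead reference the file path and commit where the prompt lives.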

How an AI BOM Supports Secure Development Practices #

Security professionals often assume that existing controls naturally extend to AI. They do not. This misconception mirrors earlier mistakes made with open-source supply chains.

An AI BOM enables controls that otherwise collapse under complexity:

- Risk assessment tied to specific models and data sources

- Faster containment when an AI component is compromised

- Enforced governance over shadow AI usage

- Clear ownership of AI-driven functionality

When teams ask what an AI BOM is, the practical answer is simple: it is the minimum artifact required to treat AI systems as auditable software components instead of black boxes.
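Governance over shadow AI usage, for instance, can start as a plain comparison between what the AI BOM declares and what the system is observed calling. The sketch below assumes the observed URLs have already been collected (for example from egress logs) and narrowed to AI endpoints; the function name and all hostnames are hypothetical.

```python
from urllib.parse import urlparse


def undeclared_ai_hosts(observed_urls, declared_hosts):
    """Return hosts seen in outbound AI traffic that the AI BOM does not declare."""
    declared = {host.lower() for host in declared_hosts}
    seen = {urlparse(url).hostname for url in observed_urls}
    # hostname is None for malformed URLs, so those are skipped.
    return sorted(host for host in seen if host and host not in declared)


# Example with placeholder hostnames.
observed = [
    "https://api.approved-llm.example/v1/chat",
    "https://inference.unknown-vendor.example/generate",
]
declared = ["api.approved-llm.example"]
print(undeclared_ai_hosts(observed, declared))
```

Anything this returns is either a gap in the BOM or an unsanctioned integration, and both cases call for an owner.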

Common Misconceptions #

Misconception #1: “We already track dependencies, so we have an AI BOM.”

Tracking Python packages does not tell you which model weights were loaded, which dataset shaped the outputs, or whether an inference endpoint calls an external provider. An AI BOM is not inferred; it must be explicitly generated and maintained.

Misconception #2: “AI BOMs are only for regulated industries.” #

Regulation accelerates adoption, but security incidents drive necessity. Model poisoning, prompt injection, data leakage, and malicious model updates affect every organization deploying AI. The AI Bill of Materials is a defensive control, not just a compliance artifact.

Misconception #3: “Model providers handle this risk for us.” #

External providers reduce operational burden, not accountability. If your system consumes AI outputs, you own the risk. An AI BOM documents that dependency so it can be governed instead of ignored.

AI BOM vs SBOM: Why Both Are Needed #

This comparison matters for DevSecOps teams trying to avoid tool sprawl. An SBOM inventories software components. An AI BOM inventories intelligence components. Overlap exists, but substituting one for the other leaves blind spots. Together, they provide a complete picture of supply chain risk.

That is why industry guidance from vendors increasingly positions the AI Bill of Materials as a complement to the SBOM rather than an optional extra.

Operationalizing an AI BOM in DevSecOps #

An AI BOM should not live as static documentation. It must integrate into the SDLC. Effective implementations generate and update it during:

- Model onboarding

- CI/CD execution

- Deployment and runtime changes

This allows security teams to answer questions quickly when something goes wrong, instead of reconstructing AI lineage after an incident.
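One way to keep the inventory current at each of these stages is to regenerate it and compare it with the previous version. The sketch below assumes a BOM is a dictionary mapping a category name to a list of entries, each with a `name` plus either a `sha256` or a `version`; that layout and both function names are assumptions made for illustration.

```python
def index_components(bom: dict) -> dict:
    """Map (category, name) to a fingerprint: the hash if present, else the version."""
    index = {}
    for category, entries in bom.items():
        for entry in entries:
            index[(category, entry["name"])] = entry.get("sha256") or entry.get("version")
    return index


def diff_boms(previous: dict, current: dict) -> dict:
    """Report components that were added, removed, or changed between two BOMs."""
    old, new = index_components(previous), index_components(current)
    return {
        "added": sorted(key for key in new if key not in old),
        "removed": sorted(key for key in old if key not in new),
        "changed": sorted(key for key in new if key in old and new[key] != old[key]),
    }


# Made-up example: one model is re-trained, one is added, one framework is dropped.
previous = {
    "models": [{"name": "ranker", "sha256": "111"}],
    "frameworks": [{"name": "torch", "version": "2.1.0"}],
}
current = {
    "models": [{"name": "ranker", "sha256": "222"}, {"name": "reranker", "sha256": "333"}],
    "frameworks": [],
}
print(diff_boms(previous, current))
```

A pipeline step could fail the build when `changed` or `added` is non-empty without a matching approval, though how approvals are recorded is outside this sketch.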

Why AI BOMs Matter for Incident Response #

When a vulnerability or malicious behavior is discovered in an AI model or framework, time matters. Without an AI BOM, teams cannot reliably answer:

- Which applications are affected

- Which environments are exposed

- Whether sensitive data was involved

The AI Bill of Materials compresses response time by turning unknowns into searchable facts.
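The three questions above reduce to a lookup once each deployment's BOM is indexed. A minimal sketch, assuming every deployment records the hashes of its AI components and a flag for sensitive data handling; the `Deployment` type, its fields, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    """Hypothetical per-deployment summary derived from an AI BOM."""
    application: str
    environment: str
    component_hashes: frozenset
    handles_sensitive_data: bool


def find_exposure(deployments, compromised_hash: str) -> dict:
    """Answer: which applications, which environments, and was sensitive data involved."""
    hits = [d for d in deployments if compromised_hash in d.component_hashes]
    return {
        "applications": sorted({d.application for d in hits}),
        "environments": sorted({d.environment for d in hits}),
        "sensitive_data_involved": any(d.handles_sensitive_data for d in hits),
    }


# Placeholder inventory with short fake hashes.
inventory = [
    Deployment("support-chatbot", "production", frozenset({"aaa", "bbb"}), True),
    Deployment("support-chatbot", "staging", frozenset({"aaa"}), False),
    Deployment("search", "production", frozenset({"ccc"}), False),
]
print(find_exposure(inventory, "aaa"))
```

The lookup is trivial on purpose: the hard part is having the inventory populated before the incident, which is the point the section makes.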

The Role of AI BOMs in AI-First AppSec #

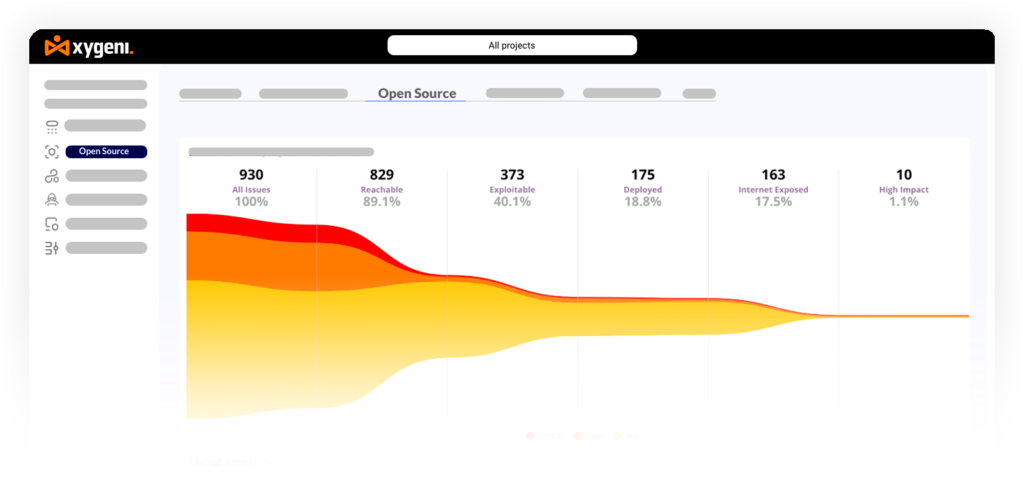

As AI becomes embedded across development, security tooling must evolve. Platforms that already provide SBOMs, malware detection, and dependency intelligence are now extending visibility into AI components. This is where platforms like Xygeni align naturally with the AI BOM concept. By correlating AI-related artifacts with code, dependencies, pipelines, and runtime behavior, AI BOMs stop being theoretical diagrams and become actionable security controls.

An AI BOM combined with real-time malware detection, SCA, CI/CD security, and ASPM enables teams to manage AI risk without slowing delivery. That is the practical endgame: visibility without friction.

Final Thoughts: Why “What Is an AI BOM” Is the Right Question #

Asking what an AI BOM is goes beyond definitions. It is about recognizing that AI systems are now part of the software supply chain and that unmanaged supply chains fail. The AI Bill of Materials gives DevSecOps teams the same leverage over AI that SBOMs brought to open source. Not perfect control, but enough visibility to make informed decisions, respond quickly, and reduce avoidable risk. That is why the AI BOM is not a trend. It is a correction.